Hello,

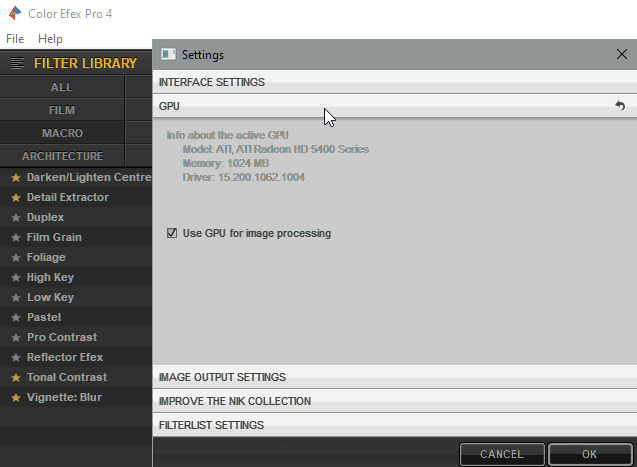

By coincidence, I noticed that I can use GPU acceleration in Color Efex but not in DxO.

In DxO it’s grayed out.

Yes, it’s an old ATI Radeon 5400, but…?

Any ideas?

Guenter

Hello,

Of course your GPU is the same, but the software’s needs are not.

Color Efex uses your GPU differently from PL4. DeepPRIME needs heavy computational power, and its minimum requirements have nothing to do with Nik’s.

My personal laptop, for instance, has a (barely) DeepPRIME-compatible GPU, but the same GPU is not “accepted” by another program, for which it is too weak…

If you make extensive use of DeepPRIME, you might consider upgrading your GPU. Even with a mid-range graphics card you can easily achieve more than 2 Mpx/sec, meaning that a 40 Mpx image will be processed in about 20 seconds…

PS: your GPU is grayed out in PL4 because it’s not compatible (i.e. not powerful enough).

Steven.

but I understand

…now that Nvidia has released the new 3000 series (if you can find one), many gamers are selling their old GPUs. If you are on the lookout for a good deal, I guess this is the right moment.

@StevenL, I don’t quite understand if this is how it works on Mac. I know that the original post is for DPL(Win), still I’d like to know how things work. The settings show a greyed-out GPU:

When I process an image with DeepPRIME, it takes 10 seconds with “automatic selection”; the same image takes 70 seconds when I set “only CPU” (and restart DPL).

I understand that I need macOS Catalina to switch to the GPU setting (it works as advertised), nevertheless, DPL seems to do just fine with the automatic setting. Note: I’m using Mojave.

Is there any way to find out which of the new graphics card brands are better for PL?

Nvidia’s new 3000 series or AMD’s 6000 series?

I’m about to upgrade to either one when stock becomes available.

Regards

Aaron

Hi there,

Here is how it works: PL chooses the best available option for you. If you have a compatible GPU, it will use it. On Mojave you can’t manually pick a detected (and DeepPRIME-compatible) GPU, but if the detected GPU is powerful enough, PL uses it anyway. You may ask, then, why a user should manually choose a GPU if PL already does that. There are some cases where you’d like to have such an option…

For example you have 2 discrete GPUs and you might wonder if the picked one is really faster than the other, or you are having some issues with the automatically chosen GPU1 and you want to see if choosing GPU2 is going to solve the issue…and so on.

You should just trust PL and not change that setting; it’s accurate about 99% of the time. But if you are unhappy with the automatic selection, you still have the option (you need Catalina for this) to manually choose another GPU…

Don’t worry: your AMD Radeon Pro 580X is put to good use by DeepPRIME, which speeds up your processing by 7x, as you have already found out.

Hi Aaron,

Our users are already sharing their results with different GPUs, from mid-range to top-of-the-line graphics cards. Have a look at this page:

Thanks for investigating, explaining, and spending time on my little problem.

For the moment, since I only develop a few photos a week, the speed with DeepPRIME is OK. I can’t decide whether to stay on Windows and invest in a new GPU, or to buy a new Windows or Mac machine.

best regards

Guenter

Thanks very much Steven, very interesting and helpful. No 6000 series AMD cards yet, understandable as they are only new to the market.

Is the speed-up rate in the last column the key figure to look at? Higher is better?

Thanks

Aaron

You should look at the Mpx/sec figure, i.e. megapixels processed per second: the higher, the better.

For example, 2 Mpx/sec means that with that GPU you will be able to process a 40 Mpx image in 20 seconds, or a 20 Mpx image in 10 seconds, and so on…

PS: AMD 6000 series?…they only appear in YouTube videos so far.
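As a rough sketch of that arithmetic (the function names here are hypothetical and are not part of PhotoLab), processing time is simply image size divided by throughput, and the speed-up is the ratio of CPU time to GPU time. The 70-second and 10-second figures are the ones reported earlier in this thread:

```python
def processing_seconds(image_mpx: float, throughput_mpx_per_sec: float) -> float:
    """Estimated processing time for one image at a given Mpx/sec throughput."""
    if throughput_mpx_per_sec <= 0:
        raise ValueError("throughput must be positive")
    return image_mpx / throughput_mpx_per_sec


def speedup(cpu_seconds: float, gpu_seconds: float) -> float:
    """How many times faster the GPU run is compared with the CPU-only run."""
    if gpu_seconds <= 0:
        raise ValueError("gpu_seconds must be positive")
    return cpu_seconds / gpu_seconds


if __name__ == "__main__":
    print(processing_seconds(40, 2))  # 40 Mpx image at 2 Mpx/sec
    print(processing_seconds(20, 2))  # 20 Mpx image at 2 Mpx/sec
    print(speedup(70, 10))            # CPU-only 70 s vs. automatic selection 10 s
```

This is only an estimate: it assumes throughput stays constant across image sizes, which real measurements may not match exactly.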

Hahah, I know!

Which particular spec of a graphics card does PL make the best use of?

I.e., the amount of on-board RAM? GPU clock speed? It can’t be FP32 GFLOPS: the older Vega 64 has a third of the RTX 3090 OC’s but is faster!

Thanks

Aaron

Yep, the Vega 64 seems to do DeepPRIME rather well, and better than any benchmark comparison predicts. I would love to see what RX 6x00 DeepPRIME performance is like.

I will be getting a new high-end card soon and will have to choose between the RX 6000 and RTX 3000 series.

Well…Vega 10 has always been quite strong in pure compute power (a lot more than the contemporary Pascal GPUs)!

It’s only hindered somewhat in that regard by software and API support, since CUDA is so popular and OpenCL doesn’t seem to work equally well.

For Ampere, on the other hand, Nvidia heavily changed the organization of the SMs!

They basically “just” doubled the FP32 CUDA cores per SM compared to Turing, so maybe the front end is somewhat limiting the GA102 here.

Peak GFLOPS have always been highly theoretical anyway, especially when comparing across generations or microarchitectures.

(Just take a look back to the VLIW Radeons like RV870 vs. Fermi!)

It would be really awesome if the DxO developers could contribute to this question and maybe take the guesswork out of the decision!