Stenis (@Stenis), I replied to an earlier post of yours in this topic and congratulated you on finding an improvement in the DeepPrime processing time between PL4 and PL5, one not present with my 1050Ti & 1050 cards. I also came across your post above about the processing of your old images of Petra and the fact that processing the photographs you took with your current A7 III took twice as long as “normal” images taken with that camera.

With respect to the performance improvement, I am glad that you seem to have gained one, but on reflection that leaves me confused! The first post in this thread (topic) described performance from a GTX1060 card and referred to a post elsewhere involving a GTX1080 card which actually showed a slight decrease in performance!?

The GTX 960 you used is a generation before my 1050Ti (and 1050); it is faster than the 1050 but very slightly slower than the 1050Ti, although in the Google DxO spreadsheet the 960 is just above the 1050Ti!? My processor(s) (i7 4790K) are a bit faster than yours, so I cannot understand where your performance improvement comes from!? In fact the PL4 versus PL5 entry from @Savay for the GTX970 GPU shows only a very slight improvement (1 second for processing the test batch).

It is possible that Lucas (@Lucas) can help because he has stated that the performance improved with the RTX2000 cards onwards, i.e. with cards equipped with Tensor cores.

With respect to your photos of Petra, I am glad that you managed to salvage your old images using PL5 and FilmPack (for the Kodak T-Max 100 emulation). I ran a test with an image I took last year at a very high ISO of 20,000 in good light (I had left the camera with the wrong settings from an “experiment” the night before), with and without the emulation, and the times were essentially the same. I have attached the photo and PL5 DOP (in a zip file) with a Virtual Copy (for the Kodak emulation) so that you can test that image on your machine if you wish.

I would guess that the increased processing time is caused by DeepPrime having to process the noise from the original media alongside the noise from the A7III. If you would like to share a single image (+DOP) via the forum or via a direct message then I will run a test on my system and see what that shows up.

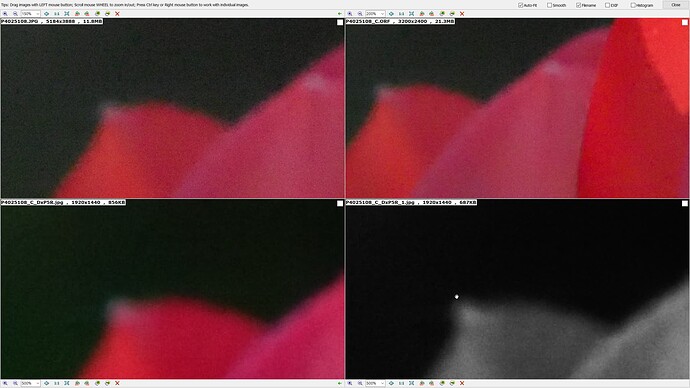

In the blog you describe the process more fully and indicate that ‘Bicubic’ may well have helped, implying that the images were resized on export. I have not applied other fixes to my tulip image as your blog suggests nor have I resized the output.

Personally I always export from PL at full size and then use the batch resize in FastStone Image Viewer (FIV). I maintain a 1920 x 1443 library alongside the original images; the resized copies are now the only images on our NAS and provide faster access for tablets and smartphones and a much more portable library. FIV offers Bicubic, Lanczos 2 (Sharper), Lanczos 3 (Default) plus other algorithms for resizing and can be left unattended to resize large numbers of images. My personal choice for resizing has been Lanczos 2 (and given that all my images prior to 2018 were JPG, I generally refrained from applying the PL ‘Lens Sharpness’ fix because that plus Lanczos 2 tended to produce “oversharp” images!). I need to revisit my strategy with respect to RAW processing, e.g. use ‘Lens Sharpness’ but change to Lanczos 3 or Bicubic or … for the library images. I am sure other forum members have their own resizing favourites.
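For anyone scripting a similar reduced-size library rather than using FIV, here is a minimal Python sketch of how the target dimensions for each resized copy might be worked out. The function name `fit_within` is my own invention, and the 5184 x 3888 source size is only an assumed example of a 20 megapixel 4:3 frame; the resampling itself (Bicubic, Lanczos etc.) would still be done by whatever tool you prefer.

```python
def fit_within(width, height, max_width, max_height):
    """Return (w, h) scaled to fit inside max_width x max_height.

    Keeps the aspect ratio and never enlarges an image that is
    already smaller than the bounding box.
    """
    scale = min(max_width / width, max_height / height, 1.0)
    return max(1, round(width * scale)), max(1, round(height * scale))


# Assumed example: a 5184 x 3888 frame fitted into a 1920 x 1443 box.
print(fit_within(5184, 3888, 1920, 1443))  # -> (1920, 1440)
```

With a 4:3 source the width is the limiting side, so the height comes out slightly under the 1443 limit.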

@MikeR and @Lucas, if you want some “boring” general landscape photos taken while wandering a golf course with my wife during the UK lockdown earlier this year, I should be able to provide 50, 100, 150 etc. RAW (20 megapixel MFT ORF) images from an Olympus EM1 MKII showing landscape shots with sky, trees, wind turbines etc. I will remove any images with people, dogs etc. This would then require a new column or additional sheet for anyone who wants to run long batch tests.

My issue with this would be actually getting the photos uploaded “somewhere” when I have an almost non-existent upload speed (though that could be done e.g. via Flickr and then “publishing” a Flickr “album”). This then poses the logistics issue of the downloads required by those (if any) wanting to take part, etc.

However, it would provide a consistent batch of images (others may have better ideas with respect to how representative such a group of images would be) to compare and contrast both GPU and processor performance, plus additional elements like storing such images on NVMe versus SATA SSD versus HDD and other “nerdy” issues that might well be important for tuning current hardware and when selecting future upgrades!
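If such a batch were agreed, the comparison between two runs (say PL4 versus PL5, or NVMe versus HDD) comes down to simple arithmetic on the export times. A rough Python sketch follows; the function names are hypothetical and the figures in the usage lines are placeholders to show the calculation, not measurements from any machine.

```python
def seconds_per_image(total_seconds, image_count):
    """Average export time per image for one batch run."""
    if image_count <= 0:
        raise ValueError("image_count must be positive")
    return total_seconds / image_count


def relative_change(baseline_seconds, candidate_seconds):
    """Percentage change of the candidate run against the baseline.

    Negative means the candidate run was faster.
    """
    if baseline_seconds <= 0:
        raise ValueError("baseline_seconds must be positive")
    return (candidate_seconds - baseline_seconds) * 100.0 / baseline_seconds


# Placeholder figures only: a 50 image batch taking 120 s, then 108 s.
print(seconds_per_image(120.0, 50))
print(relative_change(120.0, 108.0))
```

Reporting seconds per image as well as the total makes batches of 50, 100 or 150 images comparable with each other.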

PL5 Release 5.0.2.zip (21.3 MB)