MouseAT I am very late to this “party”. While I welcomed the improvements to Prime processing in PL4 with the introduction of DeepPrime, I “resented” having to pay for the privilege of the speed improvements, particularly at the beginning of 2021, just as graphics cards had become scarce, prices had risen and even bottom-end cards had become expensive!

I “resented” it because I believe, rightly or wrongly, that while developing RAW photos with “big” skies the various PL4 (and PL3 before it) settings were creating noise in the clouds which Prime (and then DeepPrime) managed to remove, i.e. if I wanted the effects I had to buy a card to make render (export) times more palatable!

So I managed to purchase a 4GB 1050TI for my beta test machine (£160) and a 2GB 1050 (£110 second-hand) for my main machine. I just ran a test on the main machine and PL4 took 27 seconds and PL5 took 24 seconds to render the same photo, essentially maintaining the existing speed.

However, the current marketplace appears to have none of the low-end cards at all and mostly starts at £430 for an RX6600, rising to £1,700 for an RTX 3080 and £2,400 for an RTX 3090. Here’s hoping the Bitcoin miners have a “rock fall/roof collapse” to bring prices back to where they should be and help save the planet from the energy being consumed!!!

If DxO are using such high end cards to make the numbers look good that is one thing but if they are completely ignoring the fact that many of the users are not using such “exotic” hardware then that is another!!

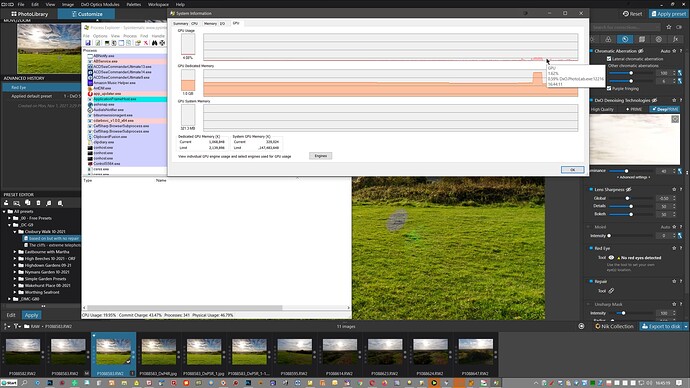

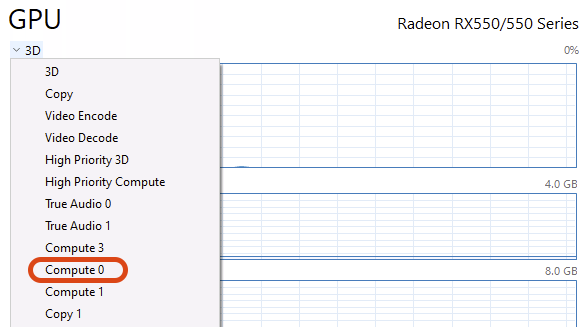

Why is so little GPU being used from my “Weedy” Graphics card?

One problem that I do have is the very small share of the graphics processor that PL actually uses while exporting. The reason for this might have been discussed elsewhere on the forum, but I would be interested to know what it is, and whether the PL4 testing of graphics cards is going to be rerun for PL5 with a comparison of results between PL5 and PL4.

Make the Noise Option an Export parameter & enable “Contact Sheet” output:-

Possibly because of my physical (eyes) or mental makeup (photographic retention), I cannot successfully compare images unless the transition time between them is essentially nil, i.e. the time it takes to move from one image to the next in PL is too long for me to judge effectively which of my alternative option choices are better, worse or indifferent!!

This time difference is too great even between images and virtual copies. Hence, the only alternative is to export the photos so that I can compare them in a browser/editor that is not attempting to render at the same time as presenting; products like FastStone Viewer and FastRaw Viewer can also provide multiple image comparisons, if required. Currently that means changing the Noise reduction from DeepPrime to HQ to speed up the export process and then changing it back when I want to export the final JPG!

If the noise reduction method could be defined in the export process then life would be so much easier. I could create one export profile with HQ selected, in addition to one with DP selected or with the option left blank so that it defaults to the settings for the photo. I could then export for review (my contact sheet, if you like) before my production exports, once I have decided which selection of options is the “best”.

An alternative would be a smart snapshot function in PL5 itself but that is probably going too far?