Thanks, perfect! Now I’m wondering where the problem might be. I have a Sapphire Radeon RX580 and an AMD Ryzen. Maybe it’s not the driver. I believe I started having problems going from Photolab 3 to 4, but it might have been 4 to 5. It was DxO itself that told me the problem was in AMD’s driver, and switching to a really old driver made the glitches go away (not the “processing errors”). But it could be an interaction with something else in the system that triggers the problem.

It would be sooooo much easier if you could borrow a higher spec graphics card and find out for sure.

I decided it was time to upgrade one of my machines. Main and Test are both i7-4790k with 24GB of memory each, SATA SSDs for booting and large quantities of HDD space. Main has a GTX 1050 with 2GB, bought second-hand during the graphics card crisis, after I had bought a GTX 1050Ti with 4GB just as the market dried up and fitted it to Test.

I have been feeling that the 1050 performance has declined compared with the 1050Ti on recent releases but both are s l o w!

At the weekend we were visiting my oldest son so I took the opportunity to test with my Grandson’s machine and my Son’s machine. Both are Ryzen systems but my Son’s system is used for Architectural model rendering and my Grandson’s for gaming but now replaced by a PS/5.

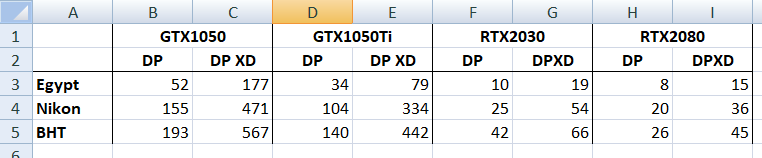

So here are some figures comparing GTX1050 2GB, GTX1050Ti 4GB, RTX2060 and RTX2080 running the Egypt image, the Nikon batch images and 10 images of my own (from a Lumix G9).

So do I buy my Grandson’s RTX 2060, in which case I would have to supply a replacement graphics card, or buy a new RTX 2060 and sell the slower graphics card? The latter would disappoint my Grandson, who “wants” the money but who might be too disappointed with the performance of the GTX 1050. I feel that purchasing a new RTX 2060 is probably the best course of action (and making a donation to his funds)!?

PS: Update: when I wrote this I used both RTX 2030 and RTX 2060 when describing my Grandson’s graphics card and then replaced 2060 with 2030, which was incorrect. It should have been RTX 2060!

In the meantime I have ordered an RTX 3060 so I will be able to run that on my machine (if I can fit it successfully) and find out how much the faster Ryzen systems contributed to the times in the above Table!

When my old card of many years went south a few years ago I was forced to get a new card during the height of the gpu shortage. After buying a used card on eBay (NEVER buy a used video card), I was able to find at Microcenter a new AMD 6600 made by Gigabyte, with 8 gigs of onboard RAM. A very very very nice card that has not given me even a hiccup since I installed it. And it runs PL6 very quickly and smoothly. I always have PL6 and LR running simultaneously.

I have a MSI RX 6600 MECH 2X 8GB (AMD Radeon RX6600) card and PL6 runs smoothly.

Using a Nvidia 3070 and the results have been perfect.

I am exporting with DeepPrime XD for my Sony A7 IV lossless compressed raw files at around 10 secs per photo.

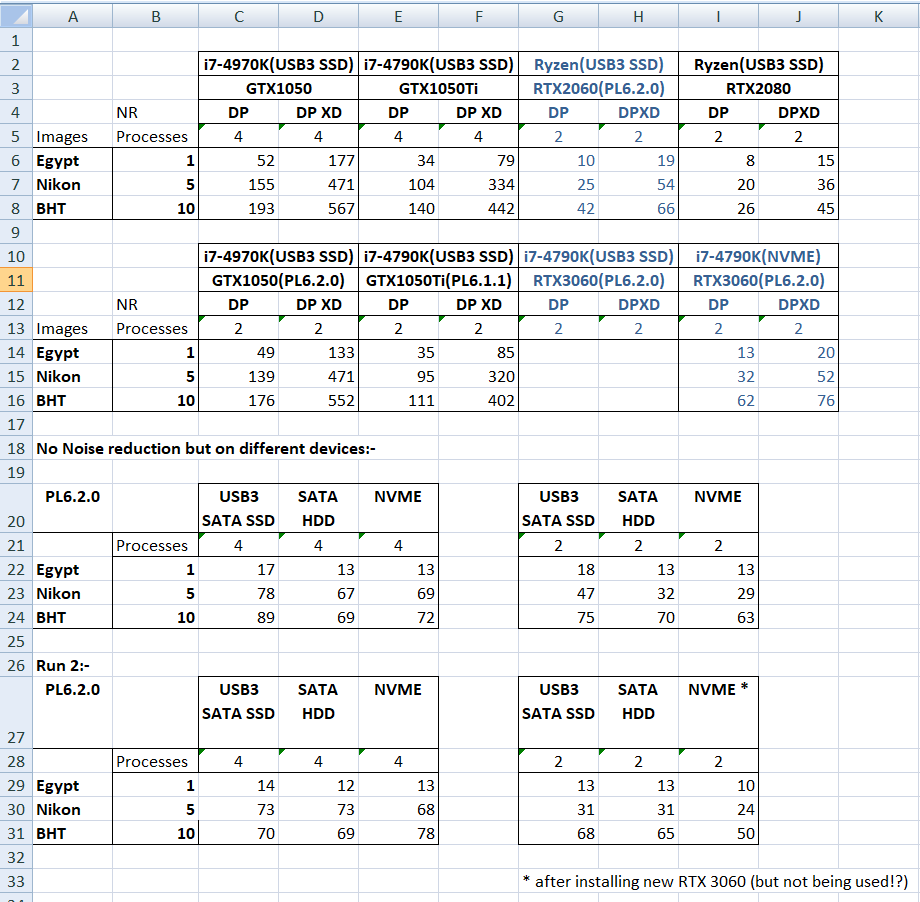

If the following is “boring” then I am sorry but it occurred to me that I had not carried out any tests on my Son’s and Grandson’s machines to try to assess how much more powerful than mine they are, i.e. run tests that should not be using the “AI” features required by DP and DP XD.

While I have not yet had an opportunity to test those machines without any noise reduction, I did that with my own two machines and have created the following, still incomplete, set of tables.

My tests on my machines were carried out with the ‘Performance’ option in ‘Preferences’ set to 4, which seems to cause an increase in export times, so I have repeated some tests with both settings but have standardised on 2 as being the recommended and best option. In all cases the exported files were written back to the same device but in a separate directory.

Hence the lower two rows of tables are all without any ‘Denoising’ selected: two runs looking for consistency, which appears to be consistently absent! The very last test with NVMe was run after the installation of the new graphics card, but that card should play no part in those exports. My BIOS reverted to default during the installation and I have not fully restored the slight processor overclock.

I have yet to run a test with the new graphics card using the USB3 SATA SSD because the installation has “broken” the front USB3 hub (dislodged a power cable I suspect).

I was hoping that the RTX3060 would prove slightly better than the RTX2060, but the speed of the Ryzen in my Grandson’s machine must also be taken into consideration!

I have run my own tests because I feel that there are conflicting results in the spreadsheet. Some identical graphics cards, with not dissimilar machine hardware seem to produce different results (just like some of mine, oops!?)

I have the slightly older RX 480. Your RX 580 more or less is a more efficient RX 480.

There are some settings that can be tweaked that really improved the stability of my RX 480 in DxO PL without resulting in less performance or error in other applications.

First of all, disable OpenCL in the DxO PL settings. OpenCL in combination with the RX 480 has been very unstable on my machine.

Next, you can change a setting in the AMD software. I assume you have AMD Software: Adrenalin Edition installed and set to English. Make the following change:

- In the AMD Radeon software, go to settings (the gear icon in the upper right corner).

- Select tab Graphics

- On the bottom of the page, you’ll find a collapsible menu Advanced. Expand that

- The second to last option is GPU Workload. The default setting is Graphics. Change this value to Compute.

Last but definitely not least: performance and stability differ per AMD driver release. The latest version, 22.11.2, is really unstable. But the previous release, 22.10.3, turns out to be quite stable with the change made above. While typing this, I processed 19 images from my Canon EOS M50 without a problem.

This sounds odd. I’m on PL 5, so I’m just using Deep Prime, but I just processed an image in 8.29 seconds, and I’m the OP with the old, slow, error-prone Radeon RX 580.

Here’s how I timed it: PL seems to spend some time setting up. Then the clock icon on the image turns into a spinning icon. From then on, each additional image starts up right when the previous image finishes, unless there’s a processing error, in which case there’s a delay as PL recovers from the error. I counted the time only when the rotating icon is going and skipped any timing if a processing error occurred.

With just 3 images, it took multiple runs until they all succeeded without any processing errors. Counting from when the first spinning icon appeared, the total time for all 3 was 24.86 seconds, or about 8.3 seconds per image. My images are 33 MP, like yours. Mine are also compressed RAW images (Canon, not Sony).

If the Nvidia takes 10 seconds per image, either it’s very slow or Deep Prime XD is. Are you sure you timed this right? The setup time varies, but is around 8 seconds and would have added about 2.7 seconds per image, so even including it I get about 11 seconds per image. The 3070 should be way faster than my card.

Thanks! I tried the settings with 22.11.2. Things actually ran a little faster (almost the same, really), but I still had processing errors. I’ll have to revert to 22.10.3 and try it again.

DeepPRIME XD takes more time to process than DeepPRIME. Several people have posted processing times. My latest measurements were these

I measured processing times on an iMac 2019 (Radeon Pro 580X 8 GB, 3.6 GHz 8-Core Intel Core i9) running Big Sur from an external Samsung T5 SSD (OS, SW and images on the same drive) and got the following times for HD, Prime, DeepPrime and DeepPrimeXD respectively:

- 33 seconds

- 144 seconds

- 75 seconds

- 385 seconds

DPL was set to process 3 images in parallel.

As for results, I’m not sure if the processing times for XD justify the marginally better detail compared to what I get with DeepPrime. Also, Prime seems to fall behind. In the context of the hardware used, I’d just use HD or DeepPrime and forget the other two.

Processing on the M1 MacBook Air gave me the following times:

- 36 seconds

- 200 seconds

- 43 seconds

- 351 seconds

Note: All times for processing 10 files.
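To make the two lists above easier to compare, here is a minimal sketch that turns the 10-file batch totals into seconds per file. The mode labels and batch times are copied from the post; the names `BATCH_SECONDS` and `per_file_seconds` are only illustrative.

```python
# Batch totals in seconds for 10 files, copied from the two lists above,
# in the order given in the post: HD, Prime, DeepPrime, DeepPrimeXD.
MODES = ["HD", "Prime", "DeepPrime", "DeepPrimeXD"]
BATCH_SECONDS = {
    "iMac 2019 (Radeon Pro 580X)": [33, 144, 75, 385],
    "M1 MacBook Air": [36, 200, 43, 351],
}
FILES_PER_BATCH = 10


def per_file_seconds(batch_totals, files=FILES_PER_BATCH):
    """Return the average seconds per file for each denoising mode."""
    return {mode: total / files for mode, total in zip(MODES, batch_totals)}


for machine, totals in BATCH_SECONDS.items():
    per_file = per_file_seconds(totals)
    summary = ", ".join(f"{mode}: {secs:.1f} s" for mode, secs in per_file.items())
    print(f"{machine}: {summary}")
```

Note that these are averages over a batch processed 3 images in parallel on the iMac, so they are not the same thing as the time a single image would take on its own.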

@freixas as far as I know the graphics card is only being used for noise reduction DP or DP XD. In my spreadsheet above I have included timing for exports with no noise reduction selected.

I didn’t do that just to be perverse but because much of the work is being done by the processor. I now know that my Son has a monster Ryzen 3950X, my Grandson a Ryzen 5 3600X and I have an even older i7 4790K, with PassMark scores of i7 4790K (8058 2463), Ryzen 3600X (17795 2657) and Ryzen 3950X (39012 2710).

So for me to know whether my new RTX 3060 is actually faster than my Grandson’s RTX 2060 I would need the same processor installed or need the figures that I have above for my system to determine how much time is using CPU (and is that single or multi-threading, almost certainly some and some) for each of the three systems.

So if a batch of images takes 76 seconds but the same batch takes 63 seconds without DP or DP XD, then by crude arithmetic we appear to have 13 seconds of GPU time for noise reduction, and the rest is being handled by the CPU. It seems unlikely that the CPU part runs purely in single-thread mode, given that all the CPUs have similar single-thread performance.

Therefore it is sensible to surmise that there is at least some multi-thread performance being used and there the three systems are very different, with mine left trailing in the dust!
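To put numbers on how different the three CPUs are, here is a small sketch that expresses the quoted PassMark scores relative to the i7 4790K. It assumes each pair is (multi-thread, single-thread), which the post does not state explicitly, and that the first pair belongs to the i7 4790K named earlier in the post.

```python
# PassMark scores as quoted above. Reading each pair as
# (multi-thread, single-thread) is an assumption, as is attributing
# the first pair to the i7 4790K.
SCORES = {
    "i7 4790K": (8058, 2463),
    "Ryzen 3600X": (17795, 2657),
    "Ryzen 3950X": (39012, 2710),
}


def relative_to(baseline, scores=SCORES):
    """Return (multi, single) score ratios of every CPU against the baseline CPU."""
    base_multi, base_single = scores[baseline]
    return {
        cpu: (multi / base_multi, single / base_single)
        for cpu, (multi, single) in scores.items()
    }


for cpu, (multi, single) in relative_to("i7 4790K").items():
    print(f"{cpu}: {multi:.2f}x multi-thread, {single:.2f}x single-thread")
```

On these figures the single-thread ratios stay within about 10% of each other, while the multi-thread ratios are roughly 2.2x and 4.8x, which is the gap the paragraph above is pointing at.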

So buying a powerful GPU makes sense to achieve some reduction in time (the part of the process that can be attributed to noise reduction). But if it is partnered with a slow CPU, the gains are not going to seem as great as they might be, and the law of diminishing returns sets in: a more powerful GPU makes a smaller increment in overall performance for the money because it is “only” attacking part of the problem, or so I believe.
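The diminishing-returns argument can be sketched with the 76 second / 63 second example from earlier in this post. This is only the same crude arithmetic: it assumes the 13 second difference is pure GPU time, the remaining 63 seconds is pure CPU time, and the two do not overlap. The function name `export_time` and the speed-up factors are hypothetical.

```python
def export_time(cpu_seconds, gpu_seconds, gpu_speedup):
    """Total export time if only the GPU part becomes gpu_speedup times faster."""
    return cpu_seconds + gpu_seconds / gpu_speedup


TOTAL_WITH_DENOISE = 76.0     # seconds, batch with DP or DP XD
TOTAL_WITHOUT_DENOISE = 63.0  # seconds, same batch with no denoising

cpu_part = TOTAL_WITHOUT_DENOISE                       # assumed to be all CPU
gpu_part = TOTAL_WITH_DENOISE - TOTAL_WITHOUT_DENOISE  # assumed to be all GPU

for speedup in (1, 2, 4, 8):
    total = export_time(cpu_part, gpu_part, speedup)
    overall = TOTAL_WITH_DENOISE / total
    print(f"GPU {speedup}x faster: {total:.1f} s total, {overall:.2f}x overall")
```

Under these assumptions a GPU twice as fast only brings the batch from 76 to 69.5 seconds, and no GPU could take it below the 63 seconds of CPU work.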

This is also the reason that just comparing image processing times for the same or comparable cards is not completely accurate when trying to assess one GPU with another, except when they are on comparable processing hardware.

In my case both of my machines are i7 4790K but not all of the hardware of the two machines is identical and my charts also show differences between processing times possibly related to the media used to hold the images and/or used to hold the outputs (and the infrastructure between that media and the rest of the machine) and the number of simultaneous threads attempted and “unknown factors” that mean that I am frequently unable to get identical figures for the “same” test.

PS But if you don’t have the right graphics card and want noise reduction then you have ‘Prime’ and the noise reduction element will be well and truly longer!

With Prime, it took 156 seconds to process all 5 Nikon images (from the USB 3 SATA SSD) and the power consumption peaked at about 200 watts.

With DP XD the graphics card was in use and the export took 57 seconds and the power spiked to over 300 watts at times!

With no noise reduction the processing took 28 seconds on one run, then 25 seconds on a second run and power went as high as 190 watts.

I would guess that DP XD processing took about 57-28 = 29 seconds (what the split is between GPU and CPU during that “GPU” denoising period I do not know), Prime took 156-28 = 128 seconds of CPU and just converting the RAW to JPG and applying the edits took 28 seconds of CPU.
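The same subtraction can be written out so it is easy to repeat with either of the two no-denoise runs (28 and 25 seconds). This is a rough sketch using only the figures above; `denoise_overhead` is an illustrative name, and the result says nothing about how the denoising time splits between GPU and CPU.

```python
IMAGES = 5
TOTAL_SECONDS = {"Prime": 156, "DP XD": 57}  # full export times quoted above
NO_DENOISE_RUNS = (28, 25)                   # the two runs without noise reduction


def denoise_overhead(total_seconds, baseline_seconds):
    """Seconds attributed to denoising: full export minus the no-denoise export."""
    return total_seconds - baseline_seconds


for baseline in NO_DENOISE_RUNS:
    for mode, total in TOTAL_SECONDS.items():
        extra = denoise_overhead(total, baseline)
        print(
            f"baseline {baseline} s, {mode}: {extra} s denoising "
            f"({extra / total:.0%} of {total} s, {extra / IMAGES:.1f} s per image)"
        )
```

Switching the baseline from 28 to 25 seconds moves the DP XD estimate from 29 to 32 seconds, which gives a feel for how much run-to-run variation blurs this kind of estimate.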

To check again, I did it by exporting 20 raws to 100% JPG, and simply use whatever time DxO PL6 has reported.

Spec:

Nvidia RTX 3070 (no overclock)

AMD 5600

3200C16 DDR4 32GB

With Sony A7IV lossless compressed RAW which are 33MP (size varies a bit),

applying DxO standard and manually select the different denoise option,

the total time to export 20 photos:

DPXD: 2m47s → 8.35s each photo

DP: 1m21s → 4.05s each photo
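For anyone wanting to repeat this with their own batch, here is a minimal sketch of the conversion from the total time PL reports to a per-photo figure. It assumes the total is noted down in the `2m47s` style used above; `parse_duration` is just a helper written for this example.

```python
import re


def parse_duration(text):
    """Convert a duration written like '2m47s', '81s' or '3m' into seconds."""
    match = re.fullmatch(r"(?:(\d+)m)?(?:(\d+)s)?", text)
    if not text or match is None:
        raise ValueError(f"unrecognised duration: {text!r}")
    minutes, seconds = (int(group) if group else 0 for group in match.groups())
    return minutes * 60 + seconds


PHOTOS = 20
TOTALS = {"DPXD": "2m47s", "DP": "1m21s"}  # totals for 20 photos, as posted above

for mode, total in TOTALS.items():
    seconds = parse_duration(total)
    print(f"{mode}: {seconds} s total, {seconds / PHOTOS:.2f} s per photo")
```

On these totals DPXD comes out at a little over twice the time of DP per photo.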

I think it is better to count whatever setup time there is, as it’s part of time you have to spend waiting. But we can compare it more accurately by just considering a batch of 20 images and see what the total takes for example.

Thanks for checking. Deep Prime XD does seem slow.

The setup time is important but it skews the results depending on how many images you have. Also, I found as I repeated my export that the setup time varied from 0 to 8 seconds or so. The more images, the less significant the setup time is. In my case, I continue to get a lot of failures, so I can’t really benchmark more than 2 or 3 images (failures also skew the results by either terminating early or adding some reset time).
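Here is a rough sketch of how the setup time washes out as the batch grows. It uses the worst-case setup of about 8 seconds and the roughly 8.29 seconds per image I measured earlier in the thread, and it assumes setup is paid once per export; the function name is only illustrative.

```python
def seconds_per_image(setup_seconds, processing_seconds_per_image, image_count):
    """Effective time per image when a one-off setup cost is spread over the batch."""
    return processing_seconds_per_image + setup_seconds / image_count


SETUP_SECONDS = 8.0       # worst case seen; it varied from 0 to about 8 s
PER_IMAGE_SECONDS = 8.29  # Deep Prime on the RX 580, setup excluded

for count in (1, 3, 20, 100):
    effective = seconds_per_image(SETUP_SECONDS, PER_IMAGE_SECONDS, count)
    setup_share = (SETUP_SECONDS / count) / effective
    print(f"{count:>3} images: {effective:.2f} s per image ({setup_share:.0%} setup)")
```

With 3 images the setup is about a quarter of the per-image figure, while with 20 images it is only a few percent, which is why small and large batches should not be compared directly.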

Because of my lack of luck with my card, I do one photo at a time, not the recommended 2.

There are a lot of things that affect a benchmark. What I’ve been most paying attention to are the people who just upgraded their card and then mentioned their performance improvement. Presumably, in those cases, the video card change was the only relevant factor.

I get “Page Not Found”.

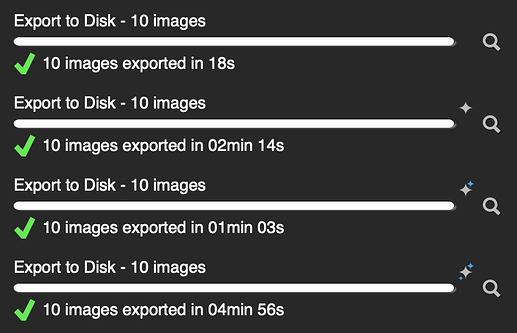

Corrected the entry in the post above…and did another test with 10 .CR2 files of 336MB total.

Settings: No Correction, processing 4 files in parallel, output as 16-bit TIFF with unchanged colour profile. With no correction (and no denoising either), processing took 11 seconds for the 10 files on a 2019 iMac with Radeon Pro 580X 8 GB.

Times (from top to bottom) with HiQ, Prime, DeepPRIME and DeepPRIME XD with default settings were as follows:

As we can see, processing times cannot be compared unless we know exactly what was tested and how. (Did you note the badges at the end of the progress bar? They show how denoising was set.)

This looks like a problem I had. Try -

Edit > Preferences > Performance > DeepPrime Acceleration

Select “Use CPU only”

Don’t forget to Restart!

Hope this helps.

That’s one way to solve it, but changing from a GPU (even an old one like the RX 580) to CPU only will drop performance a lot. We’re talking processing times with DeepPrime XD that increase from 30 seconds per photo to 6 minutes per photo.

So changing to CPU only isn’t really a solution to this problem. And with the settings and tips I (and others) mentioned earlier in this topic, you can get stable results with the RX 580 as well.

I can assure you that if you are suffering from the problem that I had, and it appears that the OP is having as well, CPU only is a very good solution indeed! I didn’t notice any drop in speed but I’m not using Deep Prime XD.

Just as I can assure you that an RX 480 or RX 580 can be stable in PL too, if you take my advice of changing some settings and are careful about which driver version you’re using.

Which GPU are/were you using?

If you don’t use DeepPrime or DeepPrime XD, you’re not using your GPU (that much). HQ NR and Prime depend on the CPU. So in those cases you won’t notice much difference. But the difference between processing with DP or DP XD using CPU only vs. GPU is very big, as the CPU takes about 10x as much time to process the same image.

Recently I processed a batch of 110 images, most of them with either DeepPrime or DeepPrime XD. In that case you’re talking about 50 to 60 minutes total processing time using an RX 480 GPU vs. almost 11 hours using my Intel i5 6400 CPU only. With that kind of CPU-only processing time, I would rather invest in a new GPU than use CPU only.
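As a quick sanity check on those batch figures, here is a small sketch that converts them to per-image times and a GPU vs. CPU ratio. It rounds “almost 11 hours” up to a full 11 hours, so the CPU numbers are a slight overestimate; the names are only illustrative.

```python
IMAGES = 110
GPU_MINUTES_RANGE = (50, 60)  # "50 to 60 minutes" on the RX 480
CPU_MINUTES = 11 * 60         # "almost 11 hours", rounded up to 11 h


def per_image_seconds(total_minutes, images=IMAGES):
    """Average seconds per image for a batch that took total_minutes."""
    return total_minutes * 60 / images


print(f"CPU only: {per_image_seconds(CPU_MINUTES) / 60:.1f} min per image")
for gpu_minutes in GPU_MINUTES_RANGE:
    print(
        f"GPU at {gpu_minutes} min: {per_image_seconds(gpu_minutes):.0f} s per image, "
        f"CPU only is {CPU_MINUTES / gpu_minutes:.1f}x slower"
    )
```

That works out at roughly half a minute per image on the GPU against about 6 minutes on the CPU, in line with the 30 seconds vs. 6 minutes per photo mentioned earlier in the thread.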