Thank you for bringing the former threads back in.

I remember again: it started with the question of which color space the raw file data is converted into.

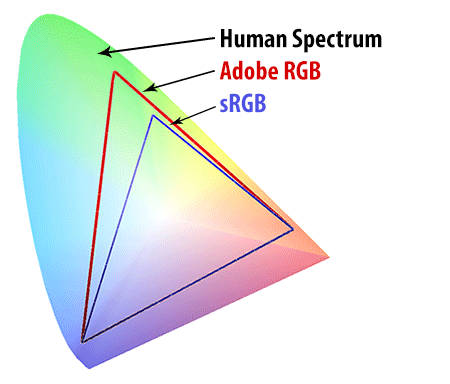

The largest color space in PhotoLab is Adobe RGB, and some of you would like to have ProPhoto for printing.

About the histogram (to see if I fully understand):

1 Is the histogram shown in the camera the one built from the JPEG that the camera’s internal processor renders from the raw file, using your in-camera Adobe RGB or sRGB setting? It’s not the LRGB interpretation of the sensor’s native color space.

2 DxO has an LRGB histogram with clipping warnings for blacks (moon) and highlights (sun), and it shows the RGB numbers 0-255 per channel when you hover over the image. But you don’t see a crosshair in the histogram showing where that point of the image sits in the histogram. (Somehow I would like that.)

3 I don’t know if it is possible to enlarge the frame/window of the floating histogram palette/tool. (I know I can in another application.) This would help to fine-tune the black point and white point of the images and to see which channel is oversaturated (colored spikes clipped at the top of the window).

4 Soft proofing would need a histogram “in” and a histogram “out” so you can see both color spaces you have selected.
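To show what I mean with the crosshair in point 2, here is a minimal sketch in Python. It assumes plain 8-bit RGB pixels and has nothing to do with how DxO builds its histogram internally; the function names `channel_histograms` and `crosshair` are made up for illustration. It builds one histogram per channel and then looks up, for the pixel under the mouse, which bin it falls in and how many pixels share that bin.

```python
def channel_histograms(pixels):
    """Return three 256-bin histograms (R, G, B) for 8-bit RGB pixels."""
    hists = [[0] * 256 for _ in range(3)]
    for pixel in pixels:
        for channel, value in enumerate(pixel):
            hists[channel][value] += 1
    return hists


def crosshair(hists, pixel):
    """For a hovered pixel, give per channel (bin, pixel count in that bin)."""
    names = ("R", "G", "B")
    return {
        name: (value, hists[index][value])
        for index, (name, value) in enumerate(zip(names, pixel))
    }


# A tiny made-up "image" of four pixels.
pixels = [(0, 0, 0), (12, 40, 200), (12, 90, 255), (255, 255, 255)]
hists = channel_histograms(pixels)
print(crosshair(hists, (12, 90, 255)))
```

A marker drawn at those three bin positions would be the crosshair: you would see directly whether the hovered pixel sits in a spike at the right edge (here the blue channel at 255).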

Resolving flat blacks (native sensor readout 0-0-0) can’t be done, I believe, because there isn’t any detail in them to resolve. But I don’t know if this 0-0-0 is also the Adobe RGB or sRGB black point 0-0-0, or whether a smaller color space floats inside the bigger color space, so that “black” is not 0-0-0 but 5-5-5 or something?

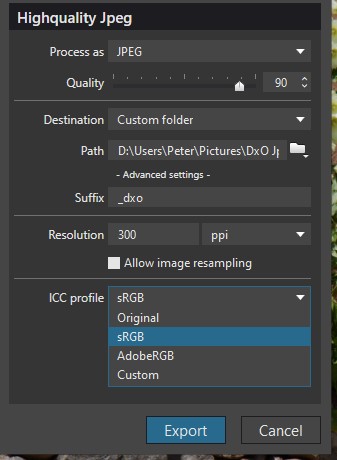

The reason I ask: if I use a slider in Selective Tone (highlights, midtones, shadows, blacks) and stretch the histogram, I push dark shadows towards the black point and highlights towards the “white point”. Those tones contain color data and detail until they hit the 0-0-0 and 255-255-255 (RGB) borders of the color space you defined (sRGB, for instance).

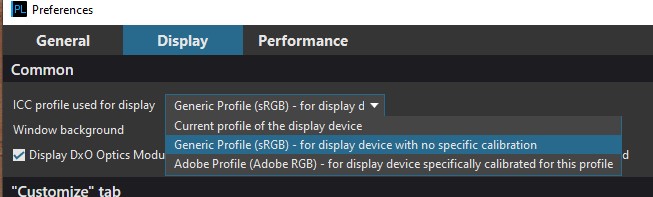
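As a rough sketch of what I mean by stretching the histogram (a simple linear levels adjustment in Python, which is my assumption and certainly simpler than what the Selective Tone sliders really do; `stretch_levels` is a made-up name):

```python
def stretch_levels(value, black_point, white_point):
    """Map black_point to 0 and white_point to 255 linearly, clamping the rest."""
    if white_point <= black_point:
        raise ValueError("white_point must be greater than black_point")
    scaled = (value - black_point) * 255.0 / (white_point - black_point)
    # Everything pushed past the borders is clamped, so its detail is gone.
    return max(0, min(255, round(scaled)))


# Example: black point at 20, white point at 235.
for value in (5, 20, 128, 235, 250):
    print(value, "->", stretch_levels(value, 20, 235))
```

Everything at or below the chosen black point ends up at 0 and everything at or above the white point ends up at 255. Tones that were different before the stretch (5 and 20, or 235 and 250) become identical, which is the loss of detail at the borders I am describing.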

But the working space is Adobe RGB, so with the preview set to sRGB I could have some colors outside the sRGB color space. So if I use exposure compensation and the Selective Tone sliders to turn down highlights and bright colors, do I “compress” the range of color “values/numbers” into my sRGB from the Adobe RGB color space only, or also from all the available data in the imported camera color space as encoded in the raw file? In other words, can I retrieve all the data the sensor captured and encoded in the raw file by using the sliders? I hope it works that way.
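To make “colors outside sRGB” concrete, here is a small Python sketch that converts an 8-bit Adobe RGB value to linear sRGB and checks whether any channel lands below 0 or above 1. This is a generic colorimetric conversion, not a description of PhotoLab’s pipeline. The matrices are the commonly published D65 values (rounded), the gamma of 563/256 comes from the Adobe RGB (1998) encoding, and the function names and the tolerance are my own assumptions.

```python
ADOBE_GAMMA = 563 / 256  # about 2.2, Adobe RGB (1998) encoding gamma

# Linear Adobe RGB (1998) -> CIE XYZ, D65 white (commonly published values).
ADOBE_TO_XYZ = (
    (0.5767309, 0.1855540, 0.1881852),
    (0.2973769, 0.6273491, 0.0752741),
    (0.0270343, 0.0706872, 0.9911085),
)

# CIE XYZ -> linear sRGB, D65 white (commonly published values).
XYZ_TO_SRGB = (
    (3.2404542, -1.5371385, -0.4985314),
    (-0.9692660, 1.8760108, 0.0415560),
    (0.0556434, -0.2040259, 1.0572252),
)


def mat_vec(matrix, vector):
    """Multiply a 3x3 matrix by a 3-component vector."""
    return tuple(sum(matrix[r][c] * vector[c] for c in range(3)) for r in range(3))


def adobe_to_linear_srgb(rgb8):
    """Convert an 8-bit Adobe RGB triple to linear (unclamped) sRGB."""
    linear = tuple((c / 255.0) ** ADOBE_GAMMA for c in rgb8)
    return mat_vec(XYZ_TO_SRGB, mat_vec(ADOBE_TO_XYZ, linear))


def out_of_srgb_gamut(rgb8, tolerance=1e-3):
    """True if any linear sRGB channel falls outside 0..1 (with a small tolerance)."""
    return any(c < -tolerance or c > 1 + tolerance for c in adobe_to_linear_srgb(rgb8))


print(out_of_srgb_gamut((0, 255, 0)))      # pure Adobe RGB green
print(out_of_srgb_gamut((128, 128, 128)))  # neutral mid gray
```

The saturated Adobe RGB green needs a negative red value in sRGB, so it cannot be shown there without clipping or compressing it, while a neutral gray fits in both spaces.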

Because then a well-working clipping detection system with an adjustable “threshold” could be helpful to optimize the tone curve balance of the image: compressing the RGB values that represent the hue, making bright areas less bright so they fit inside the color space I chose in the preferences, like sRGB. (I know I can’t see colors my display can’t show. Out of gamut is out of gamut.)
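What I picture with an adjustable threshold is roughly this (a Python sketch on 8-bit RGB values; the names `clipped_channels` and `clipping_report` and the default thresholds are hypothetical, not an existing DxO setting):

```python
def clipped_channels(pixel, low=0, high=255):
    """Return the channels of an 8-bit pixel at or beyond the two thresholds."""
    names = ("R", "G", "B")
    shadows = [name for name, value in zip(names, pixel) if value <= low]
    highlights = [name for name, value in zip(names, pixel) if value >= high]
    return shadows, highlights


def clipping_report(pixels, low=0, high=255):
    """Count pixels with at least one channel clipped in shadows or highlights."""
    counts = {"shadow": 0, "highlight": 0}
    for pixel in pixels:
        shadows, highlights = clipped_channels(pixel, low, high)
        if shadows:
            counts["shadow"] += 1
        if highlights:
            counts["highlight"] += 1
    return counts


pixels = [(0, 0, 0), (3, 10, 12), (250, 180, 90), (255, 255, 255), (128, 128, 128)]
print(clipping_report(pixels))                  # strict: only exact 0 and 255
print(clipping_report(pixels, low=5, high=250))  # warn a little earlier
```

With the strict setting only the pure black and pure white pixels are flagged. Moving the thresholds inward also flags the nearly clipped pixels, and the per-channel result shows which color channel is the one running into the border.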

My Huelight DCPs for my G80 are stretched further towards the borders than DxO’s generic camera rendering, getting more out of the sensor’s data but apparently clipping sooner in my sRGB workspace (preview).

Disregard the third rendition posted here – you can download the file and “Assign” the Adobe RGB profile to it in Photoshop or Affinity Photo.
