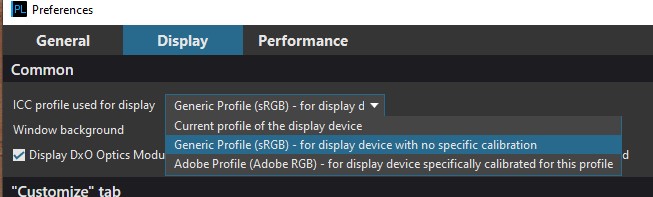

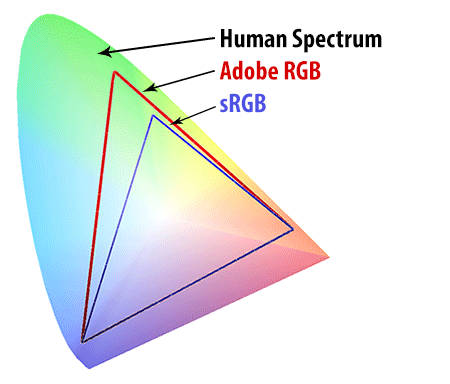

The clipping indicators in PhotoLab, especially the Moon, are a bit misleading, because the Moon combines black point clipping info with display out-of-gamut warnings. It would be really useful to have separate OOG warnings for the display and for the working colour space. The two might be more or less similar if you have a wide-gamut display very close to Adobe RGB, but what about users who don’t have such displays?

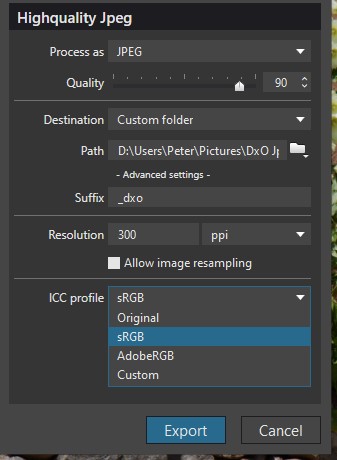

When you have a display close to sRGB and you edit your image paying attention to the Moon indicator, you are effectively soft-proofing for sRGB output. But what if you want to export the file in Adobe RGB, e.g. for printing or for people with wide-gamut displays? Your edit won’t be optimized for those outputs, because your display cannot show colours outside sRGB and you have no out-of-gamut warning for the working colour space (in PhotoLab’s case, an out-of-Adobe-RGB warning). You will throw away important colours that PhotoLab is capable of rendering, despite its limiting working colour space.
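To make the sRGB vs. Adobe RGB point concrete, here is a minimal Python sketch of what a "display gamut" warning would flag for a colour that is perfectly valid in Adobe RGB. It is only an illustration, not how PhotoLab computes its indicators. The function name `out_of_srgb_gamut`, the tolerance, and the simple 563/256 gamma decoding are my own assumptions, and the matrices are the commonly published D65 values.

```python
# Sketch: does an Adobe RGB (1998) colour fall outside the sRGB gamut?
# Assumptions: D65 white point for both spaces, commonly published
# matrices, pure-gamma Adobe RGB decoding. Names are hypothetical.

ADOBE_GAMMA = 563 / 256  # about 2.2

# Linear Adobe RGB -> CIE XYZ (D65)
ADOBE_TO_XYZ = [
    [0.5767309, 0.1855540, 0.1881852],
    [0.2973769, 0.6273491, 0.0752741],
    [0.0270343, 0.0706872, 0.9911085],
]

# CIE XYZ (D65) -> linear sRGB
XYZ_TO_SRGB = [
    [3.2404542, -1.5371385, -0.4985314],
    [-0.9692660, 1.8760108, 0.0415560],
    [0.0556434, -0.2040259, 1.0572252],
]


def _mat_vec(matrix, vec):
    """Multiply a 3x3 matrix by a 3-vector."""
    return [sum(m * v for m, v in zip(row, vec)) for row in matrix]


def out_of_srgb_gamut(adobe_rgb, tol=1e-4):
    """Return True if an encoded Adobe RGB triplet (0..1) is outside sRGB."""
    linear = [c ** ADOBE_GAMMA for c in adobe_rgb]  # undo Adobe RGB gamma
    xyz = _mat_vec(ADOBE_TO_XYZ, linear)
    srgb_linear = _mat_vec(XYZ_TO_SRGB, xyz)
    # Any component below 0 or above 1 cannot be shown on an sRGB display.
    return any(c < -tol or c > 1 + tol for c in srgb_linear)


if __name__ == "__main__":
    print(out_of_srgb_gamut((0.0, 1.0, 0.0)))  # saturated Adobe RGB green
    print(out_of_srgb_gamut((0.5, 0.5, 0.5)))  # neutral mid grey
```

A saturated Adobe RGB green gets flagged here while a neutral grey does not. A warning for the working colour space would do the same kind of check one step earlier, testing whether the rendered colour still fits inside Adobe RGB.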

And one last point: sometimes slight out-of-gamut issues aren’t worth worrying about, because the perceptual rendering intent applied during export should take care of them. Without proper soft-proofing, you have to learn to trust that it will do just fine, without resorting to Tone Curve tricks. Currently in PhotoLab you have to do hard-proofing, i.e. export and check whether there are any colour rendering issues, or create variants / virtual copies for each kind of output (not really ideal).

Disregard the third rendition posted here – you can download the file and “Assign” the Adobe RGB profile to it in Photoshop or Affinity Photo.
