@roman I am sorry that you have had a rather chequered past with hard drives! I run partially the way that you do and partially the way that I described. My main machine is a small tower system (built by me, as have been all my machines since my Amstrad PC(!)) and it is one of 3 such machines plus a laptop (my wife now has my oldest machine, still an i7 but “ancient”). Two of my machines are i7-4790Ks and the other is a very old water-cooled i7-2700K which is hardly ever switched on (which I must do today, and update the backups to it).

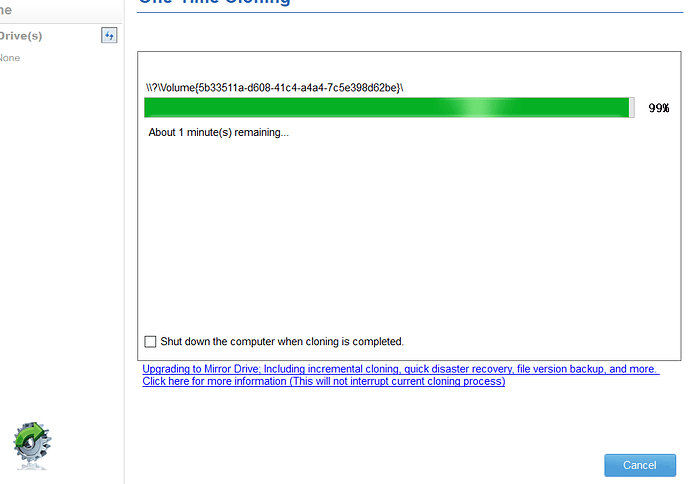

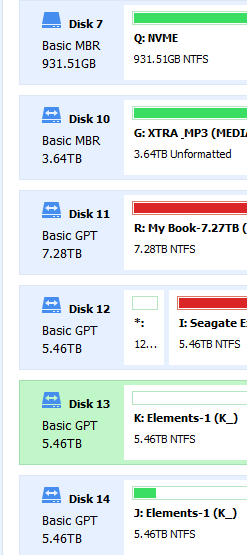

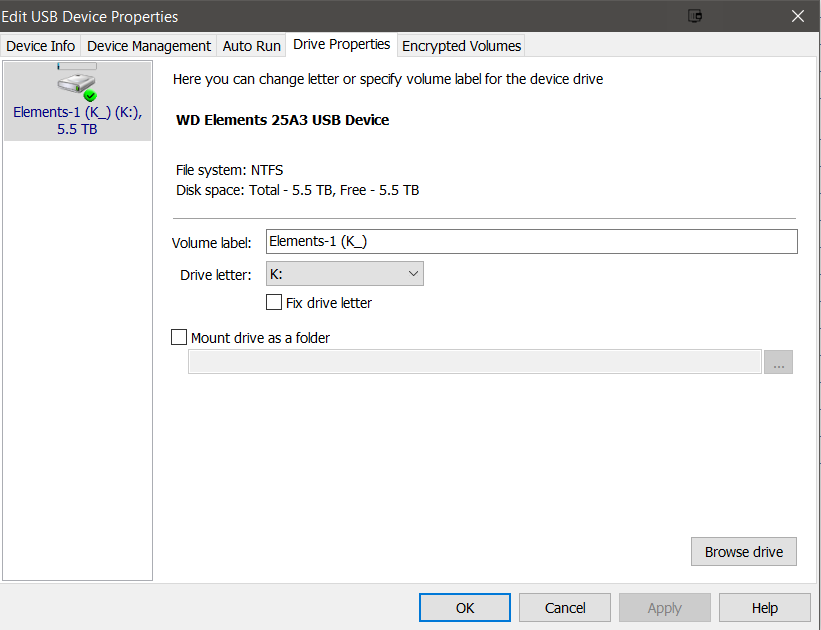

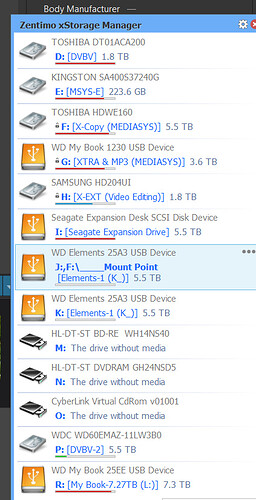

All machines boot from a SATA SSD (the C: drive) and have another SATA SSD (the E: drive) for yet more software when needed. The C: and, where necessary, E: drives are cloned fairly regularly to protect the installation. All the other drives are various combinations of 6TB, 4TB and the odd 2TB HDDs; many were bought as USB drives to be dismantled, others were replaced with larger USB drives and then dismantled. All the HDDs are backed up by copying, not cloning.

The NAS is not configured as a RAID, just as a JBOD with 3TB and 2TB drives. It holds copies of personal files, the software library (installation files and keys), a 1920 x 1440 library of all the photos taken, and an additional copy “sorted” by the various gardens we have visited since having a digital camera, i.e. 2004 onwards. That library was also held on the main machines but space is getting tight, so it now sits on the NAS, the USB 3 backup drive and a portable USB 3 drive: only 4 copies instead of 7! Holding the images as 1920 x 1440 gives quick performance on phones and tablets over wi-fi and acceptable quality on larger screens, and is a much more “portable” entity.

All the drives are fairly ancient, but I have been fortunate enough not to have had a virus damage the files. Drives do start to fail with age: most of the old SSDs are still working, but I lost a NAS drive about a year ago.

Depending on how the corruption occurred, a clone will produce an exact copy of the corrupted data, unless that corruption is actually damage to the disk, in which case the clone should run into read errors instead. The advantage of a clone is that it reads either the whole disk or the in-use sectors in order to read the original data, “exercising” either all of the disk or a large part of it at the same time.
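A copy-based backup only reads the files it copies, so it does not “exercise” the disk the way a clone does. As a rough file-level stand-in, the sketch below reads every file in a folder tree in full, hashing it as it goes, and can then list files whose contents differ between two trees. This is only an illustration and not how any particular backup tool works: the function names (`hash_file`, `read_check`, `differing_files`) are made up for this example, and the choice of SHA-256 is an assumption.

```python
import hashlib
from pathlib import Path


def hash_file(path, chunk_size=1024 * 1024):
    """Read the whole file in chunks and return its SHA-256 hex digest."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def read_check(root):
    """Read every file under root.

    Returns (hashes, unreadable): a dict of relative path -> digest,
    and a list of relative paths that raised an OS-level read error.
    """
    root = Path(root)
    hashes, unreadable = {}, []
    for p in sorted(root.rglob("*")):
        if not p.is_file():
            continue
        rel = p.relative_to(root).as_posix()
        try:
            hashes[rel] = hash_file(p)
        except OSError:
            unreadable.append(rel)
    return hashes, unreadable


def differing_files(source, backup):
    """List files present in both trees whose contents differ."""
    src, _ = read_check(source)
    bak, _ = read_check(backup)
    return sorted(name for name in src.keys() & bak.keys() if src[name] != bak[name])
```

A mismatch only says that the two copies differ, not which one is damaged, and a file that was deliberately edited after the last backup will show up in the same list.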

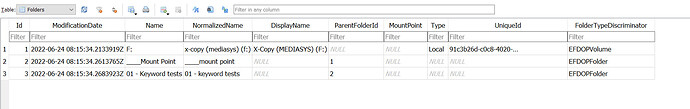

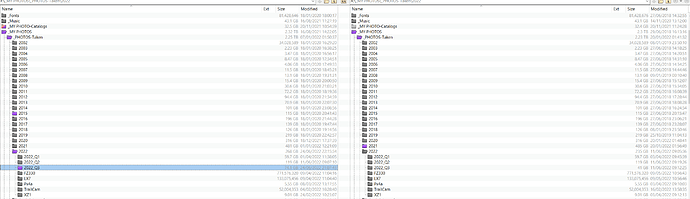

I use Beyond Compare not just because I can back up with it but because it helps me track what is happening with the files!

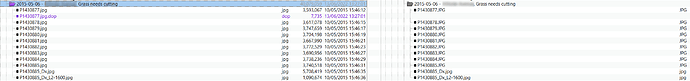

For example, why do I have 2015 flagged up? 2022_Q3 is obvious, i.e. I have taken photos that are not yet backed up!

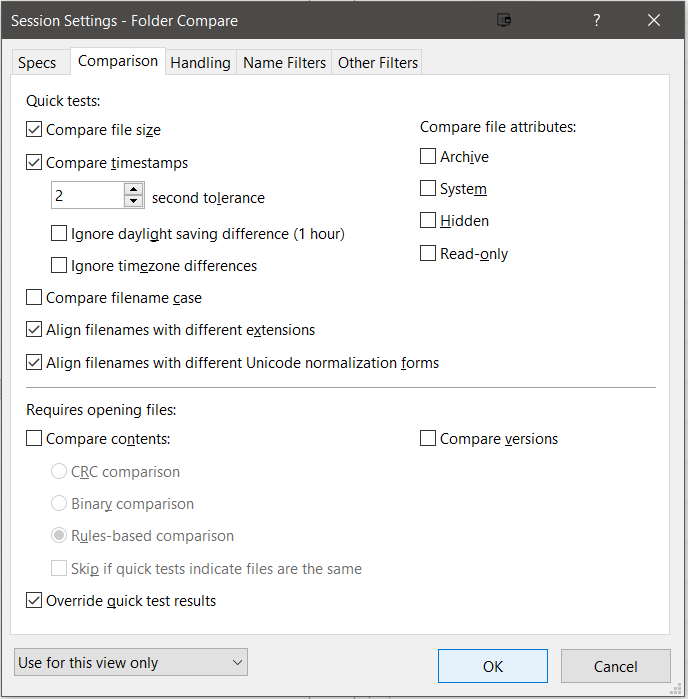

Beyond Compare does have various options available for the comparison! I generally only compare the file names, looking for missing or misnamed files between the copies, i.e.

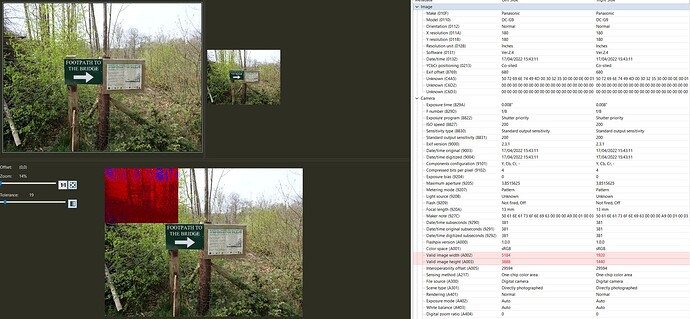

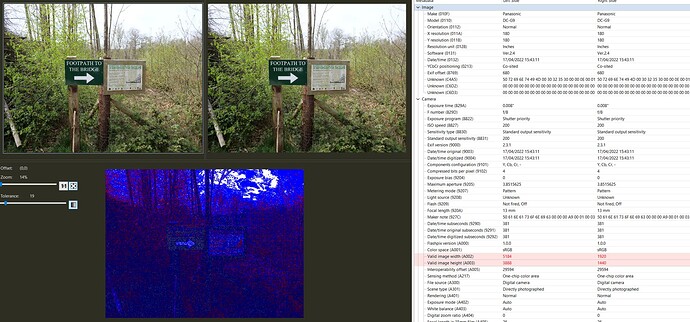
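For anyone without Beyond Compare, a name-only comparison of this kind can be roughly approximated with a few lines of Python. This is just a sketch of the idea, not what Beyond Compare does internally: the function names (`relative_files`, `compare_names`) are made up for this example, and it looks at file names only, never at contents, sizes or dates.

```python
from pathlib import Path


def relative_files(root):
    """Return the set of file paths under root, relative to root."""
    root = Path(root)
    return {p.relative_to(root).as_posix() for p in root.rglob("*") if p.is_file()}


def compare_names(source, backup):
    """Compare two folder trees by file name only (no content check)."""
    src = relative_files(source)
    bak = relative_files(backup)
    return {
        # On the source but not yet on the backup, e.g. new photos
        "missing_from_backup": sorted(src - bak),
        # On the backup only, e.g. files renamed or deleted on the source
        "only_in_backup": sorted(bak - src),
    }


# Hypothetical usage (the paths are placeholders, not my real layout):
# report = compare_names("D:/Photos", "F:/Backup/Photos")
# for name in report["missing_from_backup"]:
#     print("not backed up:", name)
```

A misnamed file shows up twice in such a report, once under each heading, which is usually enough of a hint to go and look at that folder.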

I can then use Beyond Compare to compare images and metadata (here comparing an original JPG with a 1920 x 1440 “copy”).

I do not get any commission from Beyond Compare for mentioning it here, but I “need” the ability to look into my disk files and investigate anomalies, contents etc.

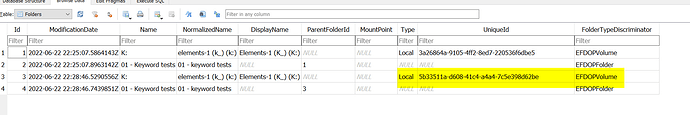

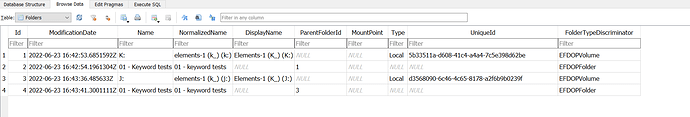

The 2015 “anomaly” was obviously me using DxPL to “experiment” with a photo, which left a DOP file that now needs to be deleted or copied to my backup(s)!