With the help of several brilliant people in the DxO PhotoLab forum, I’ve been following their advice and can now create better high dynamic range photos than ever before. I thought I was all set, until another forum friend wrote to suggest I check my finished file with the tool RawDigger. Yikes! I learned more about my file than I ever had before, and saw how RawDigger can help me improve.

I hope two things happen: first, that my forum friends, especially @Joanna, read this and try it,

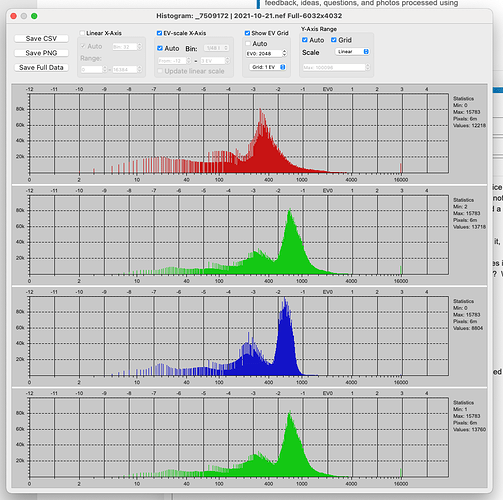

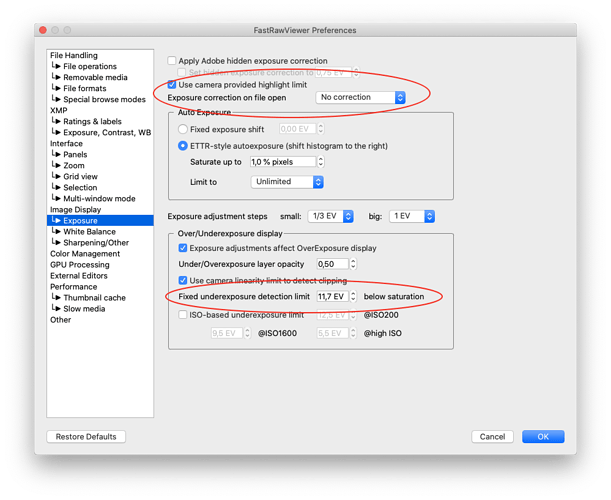

…and second, that the folks who design PhotoLab consider adding some of these capabilities into the next version of PhotoLab. Would it be good to know how many pixels have blown highlights? Would it be good to have a histogram window like the one shown below available when wanted?
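To make the "how many pixels have blown highlights" question concrete, here is a rough Python sketch of the idea. It is only an illustration, not how RawDigger or PhotoLab actually computes anything: the function name `count_blown_pixels`, the RGGB layout, the 14-bit white level of 16383, and the tiny sample data are all assumptions made up for the example.

```python
def count_blown_pixels(mosaic, white_level, pattern=("R", "G", "G", "B")):
    """Count raw pixels at or above white_level, per Bayer channel.

    mosaic:      list of rows of integer sensor values (hypothetical data).
    white_level: value at which a pixel is treated as clipped (assumed).
    pattern:     2x2 colour filter layout, row by row (RGGB assumed here).
    """
    counts = {name: 0 for name in pattern}
    for y, row in enumerate(mosaic):
        for x, value in enumerate(row):
            if value >= white_level:
                # Look up which colour filter sits over this pixel.
                channel = pattern[(y % 2) * 2 + (x % 2)]
                counts[channel] += 1
    return counts


# Tiny made-up 4x4 "sensor" with an assumed white level of 16383.
sample = [
    [16383, 1200, 16383, 900],
    [1500, 800, 16383, 700],
    [300, 16383, 200, 100],
    [400, 500, 600, 16383],
]
blown = count_blown_pixels(sample, white_level=16383)
total_blown = sum(blown.values())
```

A real raw file would need a raw decoder to supply the sensor values and the camera's actual white level, but the per-channel count is the kind of number being asked for here.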

Here’s the training video I just watched - there are many more:

Raw Digger

Here’s a link to the software:

https://www.rawdigger.com/download

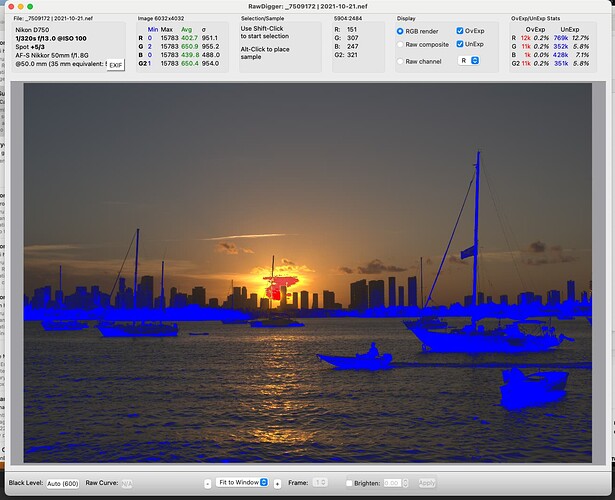

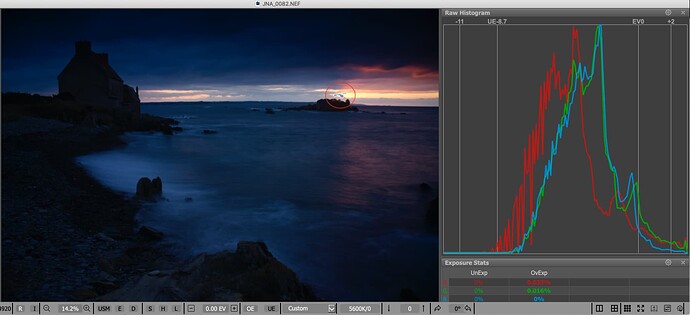

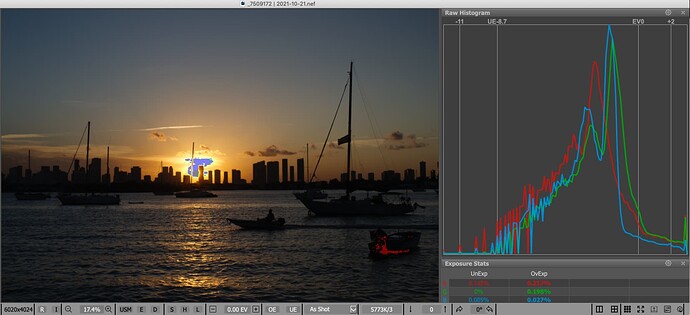

Here’s a photo I edited in PL5, with lots of help on how to capture it, after checking the finished image

All of this would be in addition to the tools we already have in PL5.

I think PL5 already does most of this, and as we change the settings, the information updates. I’m not sure how much we need all the additional information. @Joanna?