Could you take a single example and write down the precise steps you take from taking the picture, processing it, keywording it, etc, through to exporting the JPEG?

Joanna, I took one and it exported. The fix must have been done, as I went back and tried the examples I sent to support, to which they said:

“Hello,

Our team identified your issue.

We will delivered a fix for one of our future update, in March for the latest.

Thank you for your patience.

Regards,

Fernando - DxO Labs Support Team 1”

Isn’t it nice when they don’t tell you something has been fixed! Anyway, it means I can go back to processing everything in Photo Supreme rather than just the RAWs. I have been back and tried a number of others that couldn’t export before, and I am pleased to say they now export OK.

If it is any use, what I do is load images into a folder, run Photo Supreme and add keywords, back up onto the NAS, and open and edit in PL. I export to a test folder to check the results, go back and change where needed, and remove unwanted ones. I rerun Photo Supreme to remove deleted photos, if needed. Then I export to Flickr, rerun the backup onto the NAS to remove unneeded files and add the DOPs, and back up to the desk HDD and laptop (so it’s a mirror of the PC when in use away).

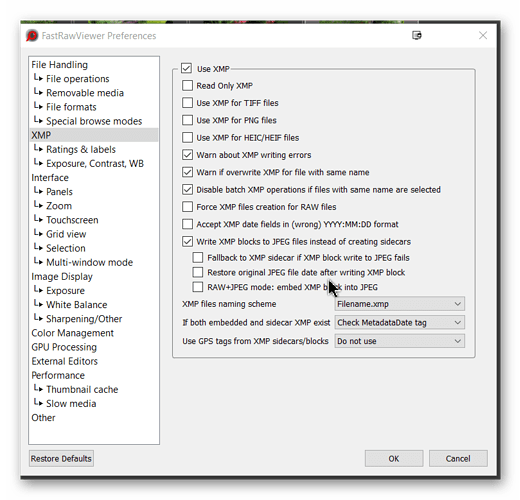

Joanna (@Joanna), you can use FRV for JPEGs, but you need to set the options so that it does not create sidecar files, as follows:-

Now there are two potential problems:

- The differences between Win10 and Mac

- I am using a Beta version of FRV that includes changes so you no longer have to go back to the ‘File’ ‘Reload’ menu to reload/refresh a photo (e.g. the Ratings); it is now available on the thumbnail (if you ask you may get, if you don’t you never will, so I asked), etc. Unfortunately, I had so many variations of FRV that I deleted all but this Beta version, so I do not know what is available as standard, oops!!

The reason for my test group of 3 JPGs and 1 or 2 RAWs is to cover embedded xmp (JPG) and sidecar xmp (RW2); with PM I can also force embedded xmp in a RAW (RW2). The 2 RAWs are RW2 and ORF: RW2 sets ratings to 0 in camera and ORF does not.

Update 1:

Looking at the options more fully, there is one that specifies how to handle the case where both external and embedded data are present. If only all software were as customisable!!

I’d call it a no-go…and pull the update until it’s fixed.

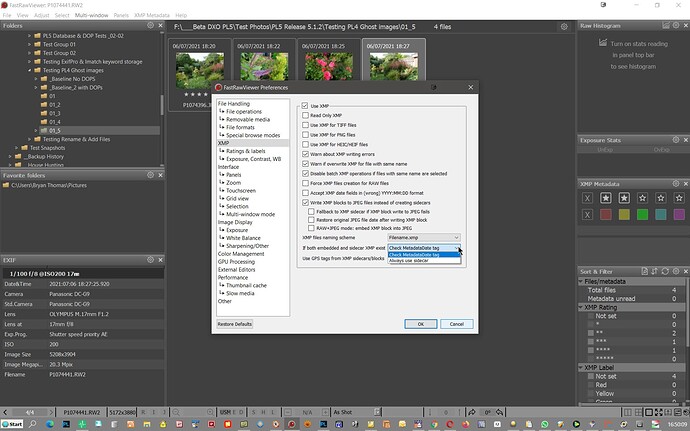

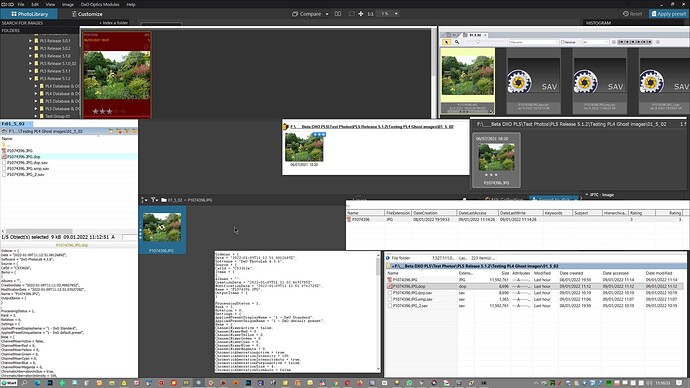

@Joanna, @jch2103, @John7, @platypus I have just been doing part of Joanna’s tests, but added an element where I went along my images using each piece of software in turn to see what happened! I have already experienced a problem with the beta version of FRV, which reared its head twice, and IMatch got stuck on two occasions while attempting to update the RAW (I believe it was attempting to update the sidecar), but I could not clear the error by getting IMatch to re-scan, given that it was IMatch doing the update in the first place!!

The problem only cleared when another program did the updates!!

That means two error reports to write, and the issue will be put down to interference between the programs, i.e. a program not being able to secure a lock (they are all running at the same time, though if well written that should not cause a problem). While that may be true, the standard procedure for such a problem is to wait and try again, and again, up to a timeout limit, with an increasing wait time between attempts.
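The “wait and try again with an increasing wait time” procedure is usually called retry with exponential backoff. Below is a minimal Python sketch, assuming (as is typical on Windows) that a file held open by another program surfaces as a `PermissionError`; the helper name `retry_with_backoff` and its default timings are hypothetical and are not taken from IMatch, FRV or PL5.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def retry_with_backoff(
    operation: Callable[[], T],
    timeout: float = 10.0,
    initial_delay: float = 0.05,
    factor: float = 2.0,
    max_delay: float = 2.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Run `operation`, retrying while the file appears to be locked.

    Hypothetical helper: treating PermissionError as "another program
    holds the file" is an assumption about how the lock surfaces.
    """
    waited = 0.0
    delay = initial_delay
    while True:
        try:
            return operation()
        except PermissionError:
            # Give up (and let the caller report a write error) once the
            # next wait would take us past the overall timeout.
            if waited + delay > timeout:
                raise
            sleep(delay)
            waited += delay
            # Increase the wait between attempts, up to a ceiling.
            delay = min(delay * factor, max_delay)
```

A caller would wrap the actual sidecar write, e.g. `retry_with_backoff(lambda: path.write_text(new_xmp))`, so that a momentary lock only delays the update instead of turning it into an error the user has to clear by hand.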

Who is causing the write delays in the case of IMatch and the read delays in the case of FRV (if such errors are actually occurring) I am not sure, but PL5 worked fine and detects the changes “immediately”; PM detects them when forced to refresh, and also automatically, but over a longer time period than PL5. It is possible that PL5’s auto-detection is causing problems, but it should only be reading when IMatch decides it has a write error!!

I will repeat the IMatch test but dropping different programs out of the mix at some point.

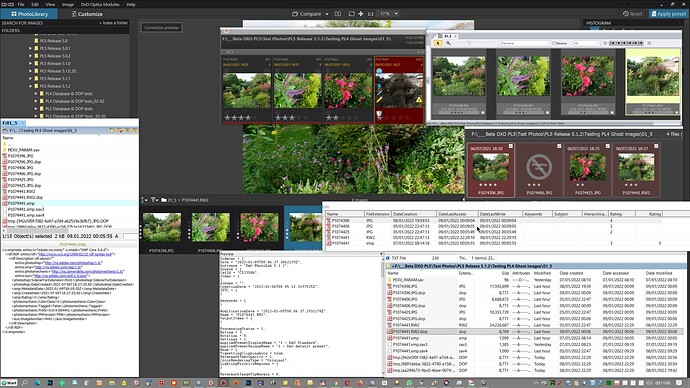

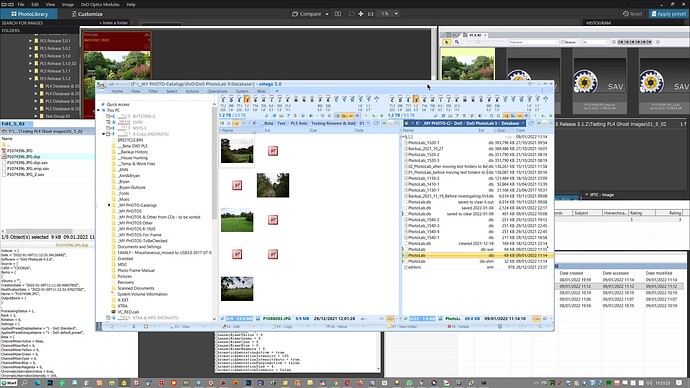

@Joanna @John7 @platypus @jch2103 What a mess I got myself into trying to test Joanna’s PL4 to PL5 issue, because I tried using my original 4 photos, which were already known to PL5! I managed to hang PL5 3 times and discovered why I have never stuck with any DAMn DAM: deleted entries are preserved by IMatch, thereby cluttering up my “nice” display.

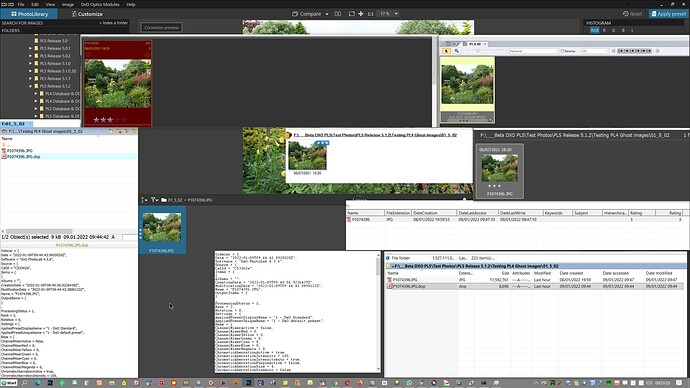

The display has two file managers showing the DOP, they were set up for the RAW testing and one was to monitor the DOP while the other monitored the xmp sidecar.

PL4 to PL5 migration via DOP works on Win10:-

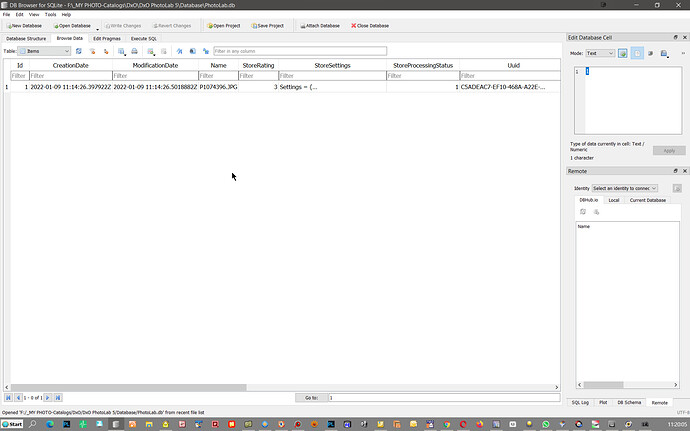

I followed Joanna’s procedure from “PL4.3.6.32 Ghosting Ratings for Same image in different directories (Win10) - #21 by Joanna”, and it worked as my other tests have indicated (“Lost all ratings in PhotoLab Elite 5.1.0 ??? - #18 by BHAYT”): when moving from PL4 to PL5, the DOP was taken in all cases as the starting point for PL5, when present. Whereas in Joanna’s tests PL5 takes the rating as 5 from the photo, in my test it took the rating as 3 from the DOP! Is this a Win10/Mac difference or pollution in my database? So, delete the DB and test again!

For some bizarre reason PL5 wants to make my backup for PL5db in the default location for the PL4 database (something I did or didn’t do!?). I messed up the tests and managed to hang PL5 with an essentially empty database on a 1 photo folder and have lost the will to …

I just repeated the test and PL5 took the value from the PL4 DOP, changed the photo setting Rating=3 and HUNG, HUNG, HUNG yet again @sgospodarenko

@BHAYT, do you still have a database that has not been spoilt by your tests?

If you do or think you do, you can restore everything based on that reference.

Depending on which version of DPL this database comes from, the restore is more or less simple, along the following steps:

- Open the “reference” version of DPL (the one with the unspoilt database)

- Export settings files (.dop sidecars) for all spoilt image files.

- Back up the database, then quit DPL

- Open DPL5, point it at an empty folder

- Restore the database from DPL (reference) - DPL can restore DBs from earlier versions

- Quit DPL5

That’s it, you should now be back with everything okay again…but as with every restore, things you did after the last “correct” use and backup will be lost.

So far, DPL has never destroyed any user data with new releases. Nevertheless, and based on recent experience, it’s important to back up the database before updating. If I used DPL as my main photo application, I would regularly back up the database, as I back up Lightroom’s catalog.
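A file-level copy of the database, taken while DPL is closed, is one simple way to make sure a backup exists before installing an update. This is a minimal Python sketch; the database file name and the folders are hypothetical placeholders (check where your own installation keeps its database), and it complements rather than replaces DPL’s own database backup.

```python
import shutil
from datetime import datetime
from pathlib import Path
from typing import Optional


def backup_database(db_file: Path, backup_dir: Path,
                    now: Optional[datetime] = None) -> Path:
    """Copy `db_file` into `backup_dir` under a timestamped name.

    Run this only while DPL is closed, so the file is not being written to.
    """
    now = now or datetime.now()
    backup_dir.mkdir(parents=True, exist_ok=True)
    stamp = now.strftime("%Y%m%d-%H%M%S")
    target = backup_dir / f"{db_file.stem}-{stamp}{db_file.suffix}"
    # copy2 also preserves the file's modification time.
    shutil.copy2(db_file, target)
    return target
```

For example, `backup_database(Path(r"C:\path\to\PhotoLab.db"), Path(r"D:\Backups\DPL"))` (both paths hypothetical) leaves a dated copy that can be restored if an update goes wrong.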

I also hope that DxO will add database management features really soon.

@platypus Thank you for the info. I have already done that successfully and also imported a PL4 database straight into PL5.

As a matter of some urgency we need:

- An ‘Add New database’ command. Whether there is any notion of co-existence in the implementation is up to DxO. I have read numerous posts elsewhere about one Lightroom Catalog versus many and understand well the benefits and risks involved. The main disadvantage is the lack of a spanning search, i.e. you can only search what is in the database you have open rather than what is in all databases. Welcome the DAM!!

- An ‘Expunge photo(s)’ command, i.e. delete from the database but not from disk. The bulk of the logic to keep indexes etc. in line is already there in PL5, but it should (just) stop short of deleting anything on disk, BUT with an option to delete (or not) the DOP at the same time. Doing so risks losing all edits from both the database and the DOP, but it is a useful option in a very tight corner, and the user can make presets from the edit settings to re-apply later.

Next step options:-

- Allow deletion of directories.

- Allow Expunge of directories (from database but not from disk). No option to remove DOPs necessary (I believe).

Not entirely true, particularly if you consider why I started this topic in the first place: the PL4 ghosting can have a knock-on impact on the Ratings in PL5 (if the Sync option is on).

We both mean the same thing: Before what we see now, DPL played nicely with assets. DPL5 updates left that track though.

Next step options:-

- Allow deletion of directories.

- Allow Expunge of directories (from database but not from disk). No option to remove DOPs necessary (I believe).

A long time ago, I decided to never rely on the database, only DOP files, for recording image edits. Thus, I can regularly delete the database and still keep all my editing data. It means I can close PL5, delete the database, rearrange my files and folders just as I want them and then restart PL5, knowing that it will pick everything up from the DOP files and rebuild the database.

The problems with that approach arise if you want to manage projects or keep the change history, and, with PL4, when keywords and ratings were added exclusively to the database.

Now that we are meant to have something resembling a DAM, that metadata has to be stored somewhere, and DxO decided to store it in both the DOP files and the database, but only optionally in XMP sidecars for RAW files.

I had a very interesting offline chat with @platypus about this mess we are currently in and we currently differ over the solution, but here is my thinking…

Personally, I wouldn’t rely on the database for anything, and so I choose to have DOP files created automatically to hold image editing data, but not metadata.

I would also rather automatically use XMP files for metadata, because it should then be in a format that any other app can read. What is more, just as PL5 can automatically read DOP files to recreate the image editing data in the database, it also automatically reads XMP files to recreate the metadata in the database.

If you look carefully at the tests I have done, there is absolutely no need to keep metadata in either DOP files or the database. PL5 never reads it back from either unless a DOP or XMP file can’t be found.

That way, I have never lost either editing or metadata and my database has never caused me problems by corrupting.
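Part of the appeal of XMP sidecars is that the rating is stored in a documented, plain-XML form (`xmp:Rating`) that any program can read. As a minimal Python sketch using only the standard library, the hypothetical function below pulls that value out of a sidecar’s text; it assumes the common layouts where `xmp:Rating` is written either as an attribute of `rdf:Description` or as a child element, and it ignores any other rating fields.

```python
import xml.etree.ElementTree as ET
from typing import Optional

RDF_NS = "http://www.w3.org/1999/02/22-rdf-syntax-ns#"
XMP_NS = "http://ns.adobe.com/xap/1.0/"


def read_xmp_rating(xmp_text: str) -> Optional[int]:
    """Return the xmp:Rating value from XMP packet text, or None if absent."""
    root = ET.fromstring(xmp_text)
    for desc in root.iter(f"{{{RDF_NS}}}Description"):
        # Compact form: <rdf:Description xmp:Rating="3" .../>
        value = desc.get(f"{{{XMP_NS}}}Rating")
        if value is None:
            # Expanded form: <xmp:Rating>3</xmp:Rating>
            elem = desc.find(f"{{{XMP_NS}}}Rating")
            if elem is not None and elem.text:
                value = elem.text
        if value is not None:
            # The XMP spec types Rating as a Real, so accept "3" or "3.0".
            return int(float(value.strip()))
    return None
```

Reading a sidecar is then e.g. `read_xmp_rating(Path("P1000123.xmp").read_text(encoding="utf-8"))` (file name hypothetical), which is essentially what any XMP-aware program has to do when rebuilding its own records from the sidecar.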

I had a very interesting offline chat with @platypus about this mess we are currently in and we currently differ over the solution, but here is my thinking…

…I’m not really concerned about DxO using a database or not, writing keywords to .dop files etc.

- Whatever DxO decides to do, they should

a) stick to the decision and

b) completely implement whatever was decided

Imo, DPL5 has added a few first steps on the road to a more comprehensive product. But: Lifting both (all) legs at the same moment to make a step will lead to landing on the belly.

I’d be well surprised if DxO abandoned DPL’s database. Some kind of memory is necessary unless an editing session is meant to end whenever DPL quits, unexpectedly or by user action.

If DPL is meant to improve its asset management aspects, database maintenance should be implemented first, as a stable foundation for everything that builds on the database.

Until we get there, sensible handling of assets is even more imperative. Regular backups can help as well as @Joanna’s ways do - within the scope of one’s needs and one’s tool’s capabilities.

Not taking precautions is risky. Still, many learn by burning their fingers first.

@platypus @Joanna I will review your comments and chip in my “five pennath” when I have finished re-commissioning the central heating system. The blockage was sitting right next to the boiler in the shape of the TF1 cleaner which had not been looked after regularly (by me) and became full of … until it choked!!

The breakthrough came just before lunch, when I tapped the cleaner unit hard and deliberately with the handle of a large screwdriver, because it suddenly seemed the most obvious candidate for a blockage (so obvious I should have found it last Monday), and the return pipe started to warm up.

First priority (especially in winter) is fixing your furnace! In my climate, it doesn’t take long before freezing water pipes become a serious issue (Frozen Pipes Are New Concern At Homes Spared By Marshall Fire In Boulder County – CBS Denver - Fortunately, most of these folks received emergency heaters to stave off the problem.)

One note about your testing: If you have multiple programs open at the same time that can edit metadata, conflicts and data corruption are almost inevitable. Metadata changes may be committed at different times based on the update algorithms the programs use. For example, IMatch stores metadata changes in its database, but they aren’t committed in the image until the file update is written. I believe Lightroom operates similarly. And as you’ve found, how and where PL5 read/writes metadata updates is currently somewhat of a mystery.

Ensuring that metadata changes made with one program are written to images before handling the metadata with a different program may avoid some testing problems.

@jch2103 fortunately the temperatures have not been too low and we are managing with convector heaters, fan heaters and an immersion heater. When I found the magnetite cleaner all gunged up and cleaned it I was hopeful that the problems were close to an end but the system is way too sluggish even with the brand new pump, so there is either an air lock or a sludge blockage or both!!

With respect to your comments about using multiple programs all at the same time, I am afraid that I consider that the test of good programming. There is no reason why a program cannot cope with such a situation if it is coded to actually detect the situation and then take remedial steps!

I decided to run each of the programs I was using to change either the JPG or RAW data through a test by setting the ratings for each photo in turn by each program in turn and watching for any fall out. Hence, in the case of IMatch I started with the first 3 JPGs and changed them one after another and then changed the RAW. The JPGs were fine but the RAW caused an upset and that seemed to continue regardless of the programs that I left running (PL5 was the first to be terminated)!

IMatch reported a ‘write’ error, and to be honest that was a surprise, because the updates from IMatch lag a bit behind the changes. After a set of changes I normally go round the programs that need to be refreshed so that the ‘Sticky Previews’ all show the correct related data (I don’t always get it right but…), i.e. the heavyweight programs are already showing the updates before I refresh the others!!

So in fact there is no classic deadlock situation at all because the updates are flowing from only one program at a time (PL5, then FRV, then PM, then IMatch). Testing the deadlock situation would be a nightmare and far more likely to happen by accident!

All are fairly well behaved. The ‘Decoding error’ in FRV I reported to the developer some time ago, and it was attributed to a read clash, but I actually believe that is not the whole story and I need to do some more investigating.

The IMatch error was shown as a write error and I could not find how to get IMatch to try again. The classic problem back in my day was a program reading data to put on a screen, accepting the updates (transaction) from the user, and then discovering that the data held on the database had changed, e.g. in a sale-of-stock situation.

For example, suppose the original data showed a stock level of 10 and the transaction was for 2 items sold. Before the 2 items can be subtracted, the record must be locked for update, and at that point the stock level turns out to be 0. Lunatic programmers wouldn’t even store the data that allowed such checks to be made and would take the stock level below 0. In some systems the database record actually has a version counter attached, which stays with the transaction throughout its life and is effectively a pass key.

One problem that I seem to remember encountering is where this situation appears to have occurred and the program reacts to it but actually the test was incorrect or the results were processed wrongly and the remedial action is itself the bug.
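The version-counter (“pass key”) approach is what is now usually called optimistic concurrency control. A minimal Python sketch of the stock example follows; `StockRecord`, `sell` and `StaleRecordError` are hypothetical names, and in a real database the version check and the update would have to happen atomically (for example in a single conditional UPDATE), which this in-memory version does not attempt.

```python
from dataclasses import dataclass


class StaleRecordError(Exception):
    """The record changed after this transaction read it."""


@dataclass
class StockRecord:
    level: int
    version: int = 0  # bumped on every successful update


def sell(record: StockRecord, read_version: int, quantity: int) -> None:
    """Subtract `quantity` if the record is unchanged since `read_version`.

    In a real database this check-and-update must be atomic, e.g.
    UPDATE stock SET level = level - ?, version = version + 1
    WHERE id = ? AND version = ?  (then check the affected row count).
    """
    if record.version != read_version:
        # Someone else updated the record: re-read and re-present to the user.
        raise StaleRecordError(
            f"expected version {read_version}, found {record.version}")
    if quantity > record.level:
        # Refuse to take the stock level below 0.
        raise ValueError(f"only {record.level} in stock, {quantity} requested")
    record.level -= quantity
    record.version += 1
```

In the scenario above, a sale prepared when the screen showed 10 in stock (version 0) is rejected with `StaleRecordError` if another transaction has already sold the stock and moved the record to version 1, instead of silently taking the level below 0.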

One concern I have with PL5 is that the PL4 DOPs work for me (but not for Joanna) and take precedence over everything when encountered! But this misses the situation where, between the creation of the DOP and its import into PL5, the embedded or sidecar data may have been changed! The tests I have been running show how well (or not) the various programs detect the validity of a sidecar versus embedded xmp data when both are present.

PL5 should be able to detect DOP versus sidecar versus embedded xmp data in any and all situations including the migration from a PL4 DOP and I believe that it does not (albeit @Joanna’s tests do not show the “blind” acceptance of the PL4 DOP that my tests seem to)!!??
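One way to make the DOP versus sidecar versus embedded decision explicit, rather than letting whichever source is found first win, is a “newest source wins” rule. This is only a sketch of that hypothetical policy, not a description of what PL5 (or FRV’s option for external versus embedded data) actually does, and it assumes each source’s modification time is trustworthy, which file timestamps often are not (copying or restoring files can change them).

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass
class RatingSource:
    name: str               # e.g. "dop", "xmp sidecar", "embedded xmp"
    rating: Optional[int]   # None if this source carries no rating
    modified: float         # modification time, seconds since the epoch


def resolve_rating(sources: Sequence[RatingSource]) -> Optional[RatingSource]:
    """Pick the most recently modified source that actually has a rating.

    Ties go to the earlier entry in `sources`, so callers list sources
    in their preferred order (max() returns the first maximal item).
    """
    candidates = [s for s in sources if s.rating is not None]
    if not candidates:
        return None
    return max(candidates, key=lambda s: s.modified)
```

With a PL4 DOP holding Rating 3 and a sidecar changed later to Rating 5, this rule would take 5 from the sidecar instead of accepting the DOP blindly.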

I will continue to run multiple programs concurrently but with so many programs it is impossible for me to create the deadlock situation unless a program is less well written! I am testing PL5 but in truth I am also testing the other software at the same time!!

One of the issues with using concurrent programs is concurrent file locks. When that happens, issues are unavoidable.

@jch2103 Agreed but there shouldn’t be any concurrency the way I am doing the tests unless some programs hang onto a file longer than they should, having detected a potential change of interest.

My “acid” test is how many programs seem to run into trouble; if there is the odd one, why has that happened, and can I replace that program in the “mix”?

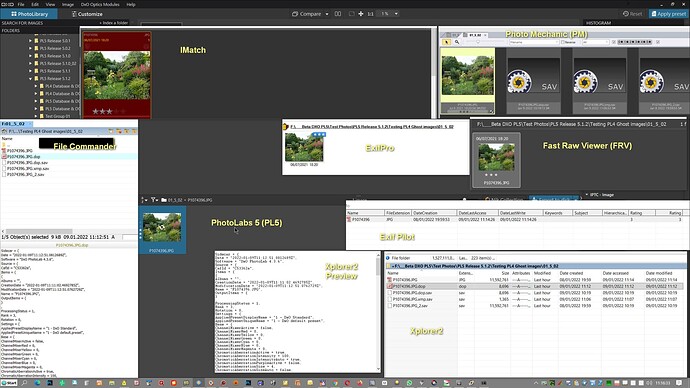

My current “mix” is PL5 under test, FRV to monitor and set RAW sidecar Ratings, and PM to monitor and set RAW embedded Ratings; I then wanted at least one more program to act as a monitor, so I added IMatch as both a monitor and an “actor”. I am also using ExifPilot to check on metadata, Xplorer2 to monitor files and DOP contents, and File Commander to monitor files and xmp sidecar contents; ExifPro is also there, but only as a monitor.

3 monitors (screens) and one aging brain to control 8 programs, most of which need to be prodded (or waited for) to show the latest state, which is one reason why I love PL5 Sync: it is truly a joy to work with (except for the occasional bug lurking beneath the surface!)

Hi Bryan

I think this thread is getting too convoluted because we are discussing too many variables at one time.

Might I suggest we nail down one situation at a time?

Let me start by going back to something you said in your initial post…

I set up a test with 4 identical directories each with 4 photos. The first had ratings assigned of 4, 3, 2, 1, the second all 0s, the third 1, 2, 3, 4 and the fourth all 0s

How and with what software exactly did you assign the ratings in the first place?

@Joanna I sort of agree, i.e. we have “deviated” into what PL5 could or should be with respect to DB versus DOP versus xmp and also into the risks of the testing that I am doing i.e. concurrency versus contention but that is not different to most posts!!

You are right because it was a long way back and I have forgotten! Looking at the snapshots I was using FRV and ExifPro (ExifPro principally for displaying multiple directories at the same time, it is also fine for setting RAW sidecar metadata but it is still “poison” to PL5 with JPGs, you can force PL5 to import the data set by ExifPro but PL5 will not/cannot then update with its own keywords!!?? ).

FRV is fine for setting JPGs but “only” sets RAW metadata in the sidecar so I added PM in later tests to be able to create the situation where an older sidecar is superseded by newer embedded data and vice versa.

Woah! There you go again, getting complicated

To start with, this topic was only about ratings and I would like, for the benefit of my own app as well, to know exactly what you were using just for setting the initial rating of an image (we’ll return to the nightmare of keywords later)

Am I right in assuming from what you have written:

- this has always been FRV?

- the rating was written directly to JPEGs and to XMP sidecars for RAWs?

As a small aside, I saw in one of your screenshots a reference to the RatingPercent tag as well as the Rating tag. What created that? Windows?

@Joanna I have attached an annotated snapshot to help identify the “culprits” and will try to stick to this layout where possible. If it is in this post then it is a preview of one of the saved xmp sidecar files displayed by Xplorer 2(squared) preview. With both Xplorer2 and File Commander the highlighted file should be the one shown in the preview.

If it is in another topic then it will come from Beyond Compare (BC) which uses a combination of add-ons including ExifTool.

Am I right in assuming from what you have written:

- this has always been FRV?

- the rating was written directly to JPEGs and to XMP sidecars for RAWs?

In the early tests in this topic the answer is yes to both, FRV was used to set embedded in JPGs and in the sidecar for RAW. In later tests PM was added to the mix and used interchangeably with FRV to set embedded in JPGs but in the case of PM also embedded in RAW. In no situation did I set sidecar files for JPGs intentionally.

PS: In the ExifPilot display, the first Rating is the one you said should be set and the second is the other one that PL5 is setting. I believe that none of the software I am using touches the second Rating field, so the value remains at whatever PL5 set the last time it made an update.