Please don’t take the following as in any way insulting.

I find it fascinating that “young” photographers now look to AI and other “automations” to “correct” their images, rather than previsualising and calculating how to obtain the best result in the camera.

I have been involved in photography for over 50 years and teach at our local photo club. The first thing we try to cover is how to move away from automatic mode.

We even have some people who believe that the camera knows best, only ever shoot JPEGs, and feel that what the camera gives you is the best you can get.

I also get criticised for referring to how I used to do things with film, as if that were irrelevant to digital. That misses the point: the laws of physics governing light have never changed, and a sensor is only a reusable sheet of colour transparency film.

They don’t seem to realise that, just like film, sensors have limitations in dynamic range, noise (the equivalent of grain) and colour rendering. These are all things that we “oldies” learnt about at great expense, because film costs money and has to be developed before you can see the result.

Last year I made an image that, I believe, no amount of automation would be able to correct:

To start with, I used a separate spot meter to measure the brightest point in the sky and the darkest point on the land where I wanted to retain detail. I know that my camera (Nikon D810) can theoretically cope with 14 stops of dynamic range but, after testing it in practice, I prefer to limit myself to 13 stops.

Since the readings indicated a dynamic range of around 15 stops, two more than my 13-stop limit, I realised that I would have to use graduated filters to hold back the brightest areas. I placed a 2-stop graduated filter over the upper right of the frame, angled from the horizon at the right-hand edge to a point about ⅓ of the way in from the left along the top edge. I then placed another 1-stop graduated filter over the sky in the top left of the frame to even out the contrast further.
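For anyone at the club who wants to check the arithmetic, here is a minimal sketch of the “dynamic range budget” described above. It assumes both spot readings are taken in EV at the same ISO, so each 1 EV of difference is one stop; the function names and the sample readings are hypothetical, chosen only to reproduce a 15-stop scene, and are not my actual meter values.

```python
import math

# Hypothetical helpers for a dynamic-range budget; not part of any camera or DxO tool.

def scene_range_stops(ev_brightest: float, ev_darkest: float) -> float:
    """Spot readings in EV at the same ISO: one EV of difference is one stop."""
    return ev_brightest - ev_darkest

def luminance_range_stops(l_brightest: float, l_darkest: float) -> float:
    """Same measure from luminance values (e.g. cd/m^2): stops = log2 of the ratio."""
    return math.log2(l_brightest / l_darkest)

def grad_needed_stops(range_stops: float, usable_stops: float = 13.0) -> int:
    """Whole stops of graduated ND needed over the bright area (0 if the scene fits).

    usable_stops defaults to the 13-stop working limit I use for the D810;
    rounding first avoids floating-point noise pushing 2.0000001 up to 3.
    """
    excess = round(range_stops - usable_stops, 2)
    return max(0, math.ceil(excess))

# Illustrative readings only: sky at EV 17, shadow detail at EV 2.
scene = scene_range_stops(17.0, 2.0)
print(scene, grad_needed_stops(scene))  # 15.0 2
```

Graduated filters are usually sold in whole stops (ND 0.3 = 1 stop, ND 0.6 = 2 stops, ND 0.9 = 3 stops), which is why the sketch rounds the excess up to a whole number.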

I have also found that, to make the most of the dynamic range the sensor can handle, I need to take a spot reading from the brightest area and open up by two stops (exposing to the right, or ETTR).
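The reasoning behind “open up by two stops” is that a spot meter renders whatever it reads as middle grey, so giving two stops more exposure than the highlight reading places that highlight two stops above middle grey. A minimal sketch of the resulting settings, assuming fixed ISO and that you adjust either shutter speed or aperture (not both); the function names are hypothetical and the two-stop default is simply what I settled on for my D810, so other cameras may need a different figure.

```python
import math

# Hypothetical helpers: convert a highlight spot reading into an ETTR exposure.

def ettr_shutter(metered_shutter_s: float, open_up_stops: float = 2.0) -> float:
    """At fixed aperture and ISO, each stop of extra exposure doubles the time."""
    return metered_shutter_s * 2 ** open_up_stops

def ettr_aperture(metered_f_number: float, open_up_stops: float = 2.0) -> float:
    """At fixed shutter speed and ISO, each stop divides the f-number by sqrt(2)."""
    return metered_f_number / math.sqrt(2) ** open_up_stops

# Example: the sky reads 1/500 s at f/16.
print(ettr_shutter(1 / 500))   # about 0.008 s, i.e. 1/125 s at f/16
print(ettr_aperture(16.0))     # about 8.0, i.e. f/8 at 1/500 s
```

In practice you would then pick the nearest marked shutter speed or aperture on the camera, since the exact result may fall between them.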

Only then can I be confident that the resulting file can be processed without either blown highlights or blocked shadows. We used to do the same kind of thing with B&W film using the Zone System, over-exposing and under-developing to compress a wide dynamic range into the more limited range of silver bromide printing paper.

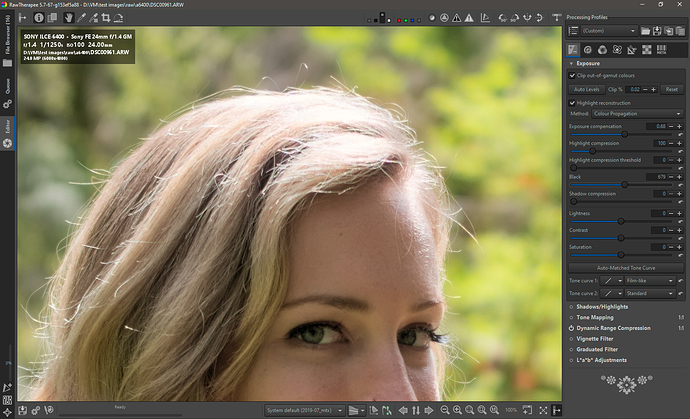

Of course, the JPEG preview image on the back of the camera looks horrendous:

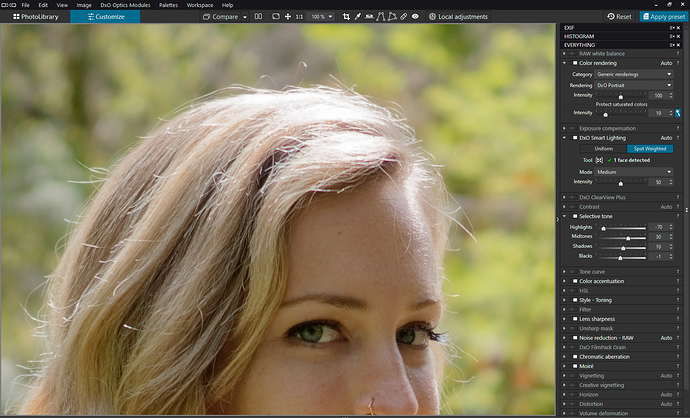

… but, just as with film, I can be confident that, after working on it in DxO, I will end up with a quality image.

Now the problem remains: how do we transfer all that knowledge and experience into a “one-click” automatic tool?