I don’t believe you’ll get the results you want. Raising ISO doesn’t make the sensor more sensitive.

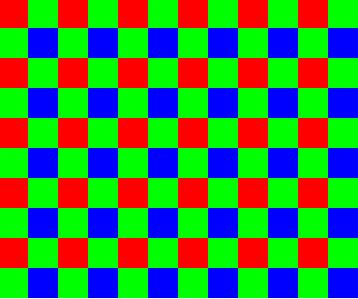

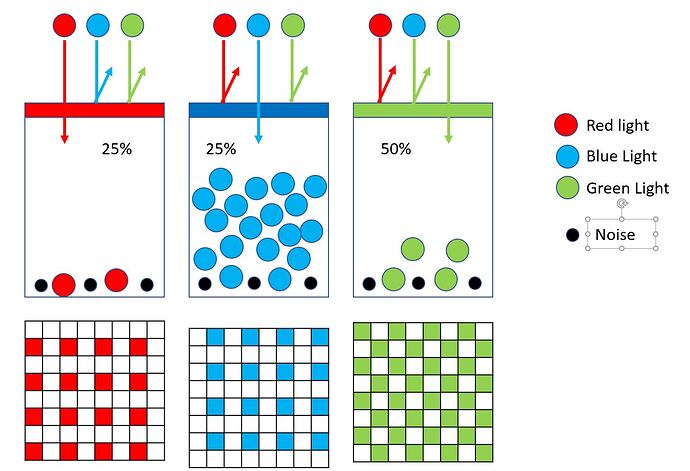

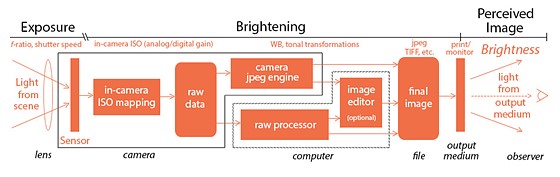

Let’s say you take a well-exposed image at base ISO. The amount of light reaching the pixels is such that the brightest light sources just about fill the pixels’ wells. No ISO boost occurs: the voltages go directly to the ADC (analog-to-digital converter), which turns them into 12- or 14-bit numbers.

Now you bump up the ISO by one stop. To compensate, you shorten the exposure time or narrow the aperture by one stop. The highlights now fill the wells to only about half their max. The ISO gain then spreads out the voltages so that a half-full well reads the same as the full well in the first exposure.

Now for the big question: Do pixels record analog or digital values? The signal is analog (a voltage) but the source of the signal is photons (digital). If you have an analog signal and boost it, you get more resolution. If you have a digital signal and boost it, you just get more spacing between the numbers.

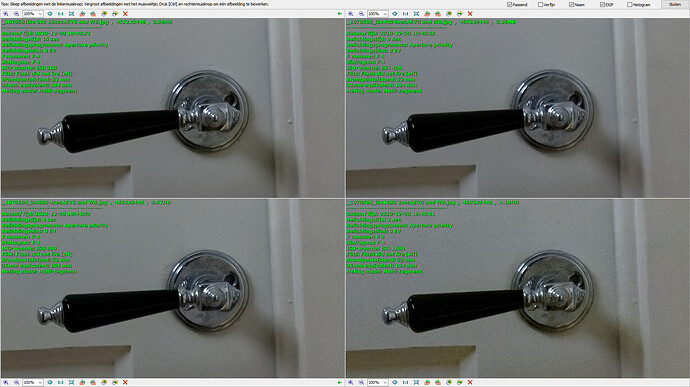

Let’s try an example. We have a 12-bit ADC (values range from 0 to 4095). We have three pixels that receive, say, 10,000, 10,100, and 10,200 photons. Let’s assume this translates to values 32, 32, and 33. In other words, the 12-bit resolution means that we can’t tell the difference between 10,000 and 10,100 photons.

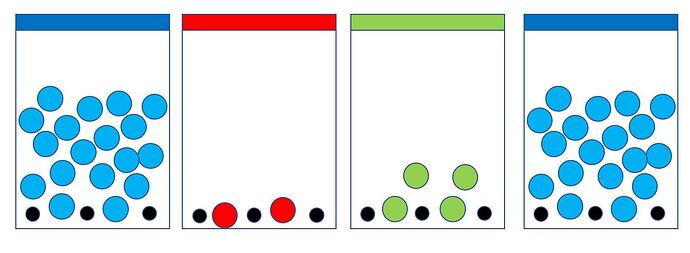

So you think: if I could just expand the values, I could separate these two shades. So you boost ISO one stop and shorten the exposure by one stop. Now the pixels receive 5,000, 5,050, and 5,100 photons. The ISO boost turns them into the equivalent of 10,000, 10,100, and 10,200, so you still get values 32, 32, and 33. But because you’ve reduced the amount of light, the image will be noisier.

Another approach: you leave the exposure the same, but boost the ISO one stop. Now you get 10,000, 10,100, and 10,200 boosted to 20,000, 20,200, and 20,400, which result in final recorded values of 64, 65, and 66. So, yes, now you’ve increased the resolution of the sensor, but every highlight that filled more than half the well is now clipped.

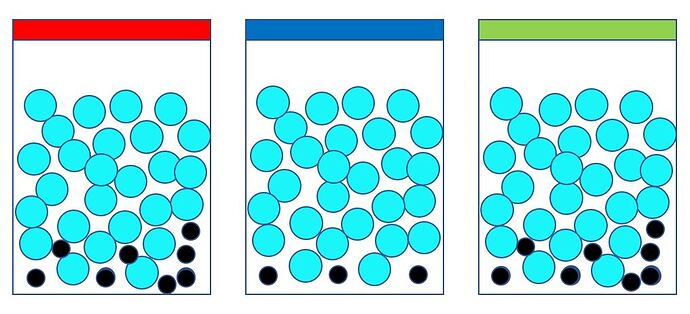

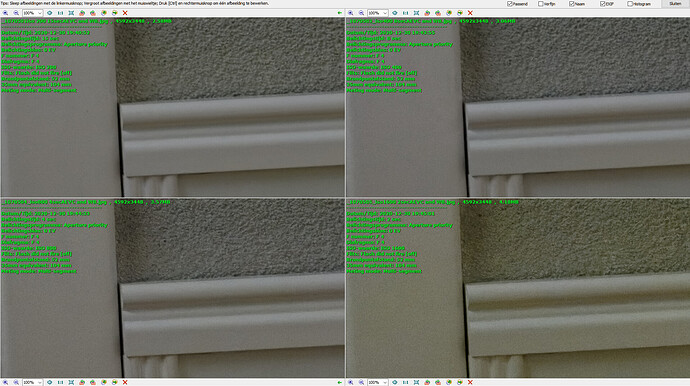
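The three cases above can be checked with a few lines of Python. This is only a sketch of an ideal, noise-free pixel: the scale of 308 photons per ADC step is a made-up figure chosen so the arithmetic reproduces the values quoted in this post, and `adc_value` is a hypothetical helper, not any camera’s actual pipeline.

```python
# Illustrative model only: an ideal, noise-free pixel feeding a 12-bit ADC.
ADC_MAX = 4095            # largest 12-bit code
PHOTONS_PER_STEP = 308    # hypothetical scale, picked to match the figures in this post


def adc_value(photons, iso_gain=1):
    """Apply the ISO gain, quantize to whole ADC steps, and clip at full scale."""
    code = (photons * iso_gain) // PHOTONS_PER_STEP
    return min(code, ADC_MAX)


pixels = [10_000, 10_100, 10_200]

# Case 1: base ISO, full exposure.
base = [adc_value(p) for p in pixels]

# Case 2: ISO up one stop, exposure down one stop (half the photons).
compensated = [adc_value(p // 2, iso_gain=2) for p in pixels]

# Case 3: ISO up one stop, exposure unchanged.
boosted = [adc_value(p, iso_gain=2) for p in pixels]

# A highlight that just fills the well at base ISO, and one at half that level,
# both read through the one-stop ISO boost.
full_well = ADC_MAX * PHOTONS_PER_STEP
highlights = [adc_value(full_well // 2, iso_gain=2), adc_value(full_well, iso_gain=2)]

print(base)         # [32, 32, 33]
print(compensated)  # [32, 32, 33]
print(boosted)      # [64, 65, 66]
print(highlights)   # [4095, 4095]
```

The last line is the cost of case 3: with the boost applied, a half-full well and a full well both come out at 4095, so everything in between is indistinguishable.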

This assumes a perfect sensor. If a sensor’s noise floor is equal to a single ADC step, it can’t resolve the difference between 10,000 and 10,100 photons anyway. Boosting noise doesn’t get you anywhere.
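To put rough numbers on that, here is a minimal sketch. It assumes the noise is already present in the signal before the gain stage and equals one ADC step, taken here as a hypothetical 308 photons. Under that assumption the gain multiplies the difference and the noise by the same factor, so their ratio does not move.

```python
# Assumed figures for illustration only.
NOISE_SIGMA = 308.0   # hypothetical noise floor, in photons (one ADC step)
DIFFERENCE = 100.0    # the 10,000 vs 10,100 photon difference we want to resolve


def contrast_to_noise(difference, noise_sigma, iso_gain=1.0):
    """Ratio of a tonal difference to the noise after an ideal gain stage."""
    amplified_difference = difference * iso_gain
    amplified_noise = noise_sigma * iso_gain  # the gain amplifies the noise too
    return amplified_difference / amplified_noise


at_base = contrast_to_noise(DIFFERENCE, NOISE_SIGMA)
one_stop_up = contrast_to_noise(DIFFERENCE, NOISE_SIGMA, iso_gain=2.0)

print(round(at_base, 2), round(one_stop_up, 2))  # 0.32 0.32
```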

Your best exposure comes from making sure any important highlights just fill a pixel’s well. You use ISO for cases where you can’t do that.

The right way to increase dynamic range is to buy a camera with a higher-bit-depth ADC, although again this is limited by the capability of the sensor. Or you can use HDR if your subject is not moving.
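As a rough illustration of what extra ADC bits buy, the sketch below quantizes the same three pixels at 12 and 14 bits. It assumes an idealized noise-free sensor and a made-up full-well capacity (4095 × 308 photons, chosen only so the 12-bit results match the example earlier in this post). On a real sensor the noise floor decides whether the finer steps carry any information.

```python
# Hypothetical full-well capacity, chosen so 12-bit output matches the earlier example.
FULL_WELL = 4095 * 308


def adc_value(photons, bits):
    """Map a photon count onto an ADC with the given bit depth, clipping at full scale."""
    max_code = 2**bits - 1
    return min(photons * max_code // FULL_WELL, max_code)


pixels = [10_000, 10_100, 10_200]

twelve_bit = [adc_value(p, 12) for p in pixels]
fourteen_bit = [adc_value(p, 14) for p in pixels]

print(twelve_bit)    # [32, 32, 33]
print(fourteen_bit)  # [129, 131, 132]
```

With the same exposure and no ISO boost, the 14-bit conversion separates all three shades without clipping anything.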