My English is not the best, to put it nicely. But what I kept writing was no different from what Wolf wrote.

As far as I know, the main lines are the same for all raw converters.

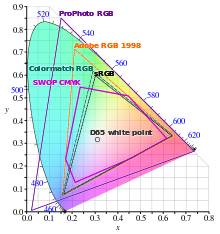

Color spaces are based on what the monitor can show, and they are connected to each other. Look at the diagram. The main variable is the wavelength of the light. Assigning a color space to a digital image means making sure that the image has pixel values such that the monitor produces the right colors. I mentioned it before: a digital image doesn’t have colors, it’s the monitor that has colors.

I also have my own questions about changing color spaces.

As you can see, the sRGB colors are covered 100% by the AdobeRGB colors. So it must be perfectly possible to show those colors in an AdobeRGB color space. On the other hand, showing AdobeRGB colors in an sRGB color space will be impossible when colors are involved that lie outside the sRGB color space. In that case some solution is used that will always alter the colors. Look up "rendering intent".
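To make the "covered for 100%" point concrete, here is a rough Python sketch. It assumes linear-light values (no gamma curve) and uses the rounded D65 matrices that are commonly published for sRGB and Adobe RGB (1998). The function names are my own for illustration, and the `clip` at the end is only a crude stand-in, not a real rendering intent.

```python
# Linear RGB <-> CIE XYZ matrices (D65 white point), rounded values as
# commonly published for sRGB and Adobe RGB (1998). Assumption: linear
# light, so the gamma/transfer curves are deliberately left out.
SRGB_TO_XYZ = [
    [0.4124564, 0.3575761, 0.1804375],
    [0.2126729, 0.7151522, 0.0721750],
    [0.0193339, 0.1191920, 0.9503041],
]
XYZ_TO_SRGB = [
    [3.2404542, -1.5371385, -0.4985314],
    [-0.9692660, 1.8760108, 0.0415560],
    [0.0556434, -0.2040259, 1.0572252],
]
ADOBE_TO_XYZ = [
    [0.5767309, 0.1855540, 0.1881852],
    [0.2973769, 0.6273491, 0.0752741],
    [0.0270343, 0.0706872, 0.9911085],
]
XYZ_TO_ADOBE = [
    [2.0413690, -0.5649464, -0.3446944],
    [-0.9692660, 1.8760108, 0.0415560],
    [0.0134474, -0.1183897, 1.0154096],
]


def mat_vec(m, v):
    """Multiply a 3x3 matrix by a 3-component vector."""
    return tuple(sum(m[r][c] * v[c] for c in range(3)) for r in range(3))


def adobe_to_srgb_linear(rgb):
    """Linear Adobe RGB -> XYZ -> linear sRGB (hypothetical helper)."""
    return mat_vec(XYZ_TO_SRGB, mat_vec(ADOBE_TO_XYZ, rgb))


def srgb_to_adobe_linear(rgb):
    """Linear sRGB -> XYZ -> linear Adobe RGB (hypothetical helper)."""
    return mat_vec(XYZ_TO_ADOBE, mat_vec(SRGB_TO_XYZ, rgb))


def in_gamut(rgb, eps=1e-4):
    """True if every channel lies in [0, 1], allowing for matrix rounding."""
    return all(-eps <= c <= 1.0 + eps for c in rgb)


def clip(rgb):
    """Crude fix for out-of-gamut values; this always alters the color."""
    return tuple(min(1.0, max(0.0, c)) for c in rgb)


if __name__ == "__main__":
    green = (0.0, 1.0, 0.0)
    print("sRGB green in AdobeRGB:", srgb_to_adobe_linear(green))
    print("AdobeRGB green in sRGB:", adobe_to_srgb_linear(green))
    print("fits in sRGB?", in_gamut(adobe_to_srgb_linear(green)))
```

The most saturated sRGB green lands inside the 0 to 1 range in AdobeRGB, while the most saturated AdobeRGB green gives a negative red value in sRGB, so it cannot be shown there without changing it.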

So why use AdobeRGB? First of all, there are monitors and displays that can reproduce those colors.

Second is the practical use of AdobeRGB. When you take a larger input gamut (range in wavelength) and divide it in the a/d converter into 255 steps (8 bit), every step in AdobeRGB will be larger than in sRGB. The solution is to use a 16 bit division, or 65535 steps. And that’s where the profit in editing comes from. It’s NOT due to the wider gamut.
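The step-size arithmetic can be checked with a few lines of Python. This is only a sketch of the counting argument: it assumes each channel is normalized to the range 0 to 1 and divided into equal steps, and the helper names are made up for illustration.

```python
def step_count(bits):
    """Number of equal steps between the lowest and highest code value."""
    return 2 ** bits - 1


def step_size(bits):
    """Width of one step when the channel range is normalized to 0..1."""
    return 1.0 / step_count(bits)


def quantize(value, bits):
    """Round a 0..1 value to the nearest representable code value."""
    steps = step_count(bits)
    return round(value * steps) / steps


if __name__ == "__main__":
    for bits in (8, 16):
        print(bits, "bit:", step_count(bits), "steps, step size", step_size(bits))
    value = 0.37
    print("8 bit error: ", abs(quantize(value, 8) - value))
    print("16 bit error:", abs(quantize(value, 16) - value))
```

One 8 bit step is as wide as 257 steps at 16 bit, so stretching the same number of steps over a larger gamut costs far less precision at 16 bit.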

Just my thoughts. Maybe I have to correct them.

George