I’ve been using PureRAW 2 to process my Fuji X-H2 (40MP) raw files on a 5-year-old Windows 10 computer with an 8GB NVIDIA video card; unfortunately it can’t be upgraded. Each image takes about 40 seconds to process and create the output DNG file. When I photograph an event with 300 or more images, that obviously takes a LONG time. I’ve used HP computers over the years, but I’m looking at a Dell with the following video card: NVIDIA® GeForce RTX™ 3080, 10 GB, LHR

Will this card significantly improve the processing time per image? Other recommendations?

Thanks.

@coughlin47 , have you seen the threads and posts that deal with similar questions? They might be related to PhotoLab instead of PureRAW, but the essence should be the same, PureRAW being supposedly a subset of PhotoLab.

One very similar thread is Recommended laptop for DxO 6

A bit old maybe: Help needed choosing new computer

Use the forum’s search to find more.

@coughlin47 from a search it appears that PureRAW uses DeepPrime, and there seems to be talk of DeepPrime XD being added? I use PhotoLab Elite, so my experience is based upon that, and I have just upgraded from a GTX 1050 2GB to an RTX 3060 (retaining a 1050Ti on my other machine).

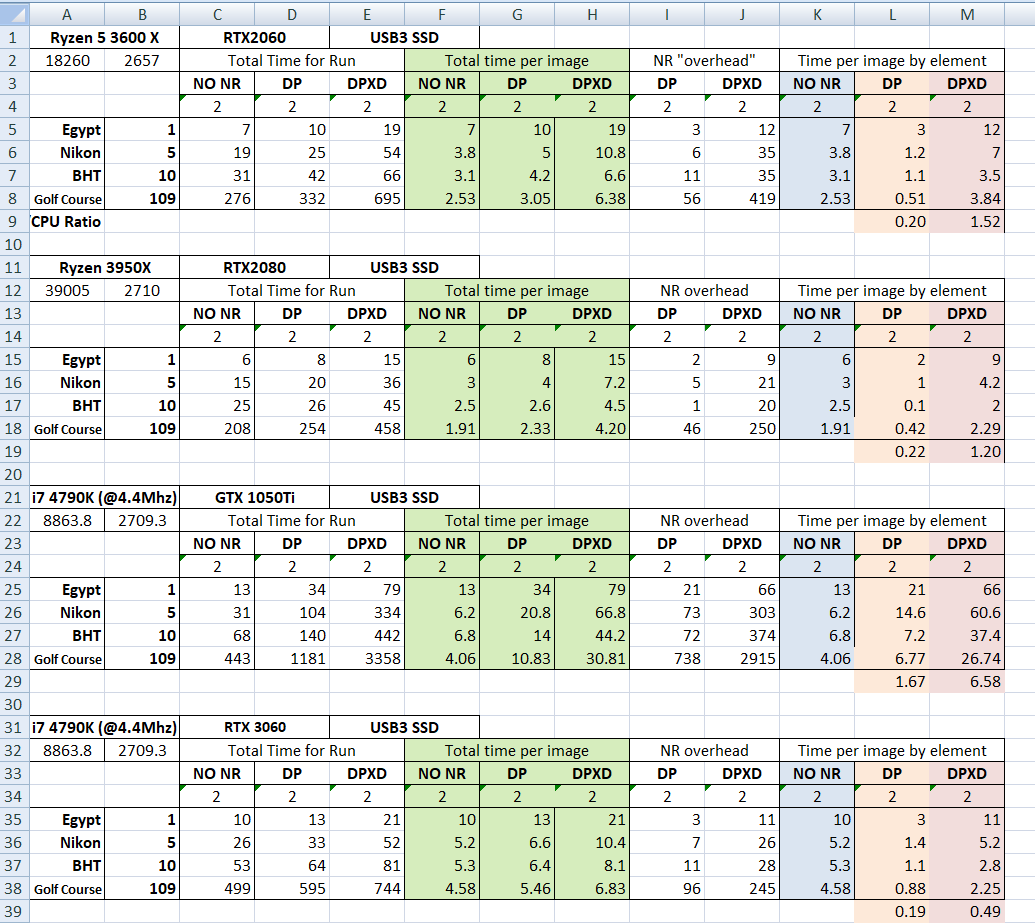

Before making that jump I tested some images on my Grandson’s RTX 2060 in a Ryzen 3600X and on my Son’s RTX 2080 in a Ryzen 3950X (possibly a Ti, but I am not sure) and put some test results here: Which graphics card do you use with Photolab? - #27 by BHAYT, and in other later posts.

The RTX 3080 should be a “beast” given the performance of my Son’s machine. However, there needs to be a balance between the graphics power for DP (and DP XD) and the processor power, which handles the rest of the editing process and feeds the graphics card that then does the “heavy lifting” for the noise reduction.

I don’t know what prices the systems you are looking at are, but given that an RTX 3080 sells for about £750, then, providing there is enough in the budget to buy a reasonably powerful processor, you could either go for the 3080 or drop down a little and increase the power of the CPU. It really depends on how complex the editing of the images is.

If you run a test where you retain all the edits but remove the Noise reduction and then compare to the same test but with NR enabled you should be able to determine what proportion of the elapsed time is currently being spent in the two phases.
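The two timing runs described above give the split directly. This is a minimal Python sketch, assuming the NR run does everything the ‘No NR’ run does plus noise reduction, so the difference between the two timings is the noise-reduction time; `phase_split` is a hypothetical helper name and the 30-second figure is only a placeholder for your own measurement.

```python
def phase_split(no_nr_seconds: float, with_nr_seconds: float) -> tuple[float, float]:
    """Return the fractions of the export time spent in the 'No NR' and NR phases.

    Assumes the NR run does everything the 'No NR' run does plus noise reduction,
    so the difference between the two timings is the noise-reduction time.
    """
    if no_nr_seconds < 0 or with_nr_seconds < no_nr_seconds or with_nr_seconds == 0:
        raise ValueError("the NR run should take at least as long as the 'No NR' run")
    nr_seconds = with_nr_seconds - no_nr_seconds
    return no_nr_seconds / with_nr_seconds, nr_seconds / with_nr_seconds


if __name__ == "__main__":
    # 40 s is the quoted per-image time; 30 s is a hypothetical placeholder
    # for the time measured with noise reduction switched off.
    no_nr_share, nr_share = phase_split(no_nr_seconds=30.0, with_nr_seconds=40.0)
    print(f"'No NR' phase: {no_nr_share:.0%}, noise reduction: {nr_share:.0%}")
```

If most of the time turns out to be in the ‘No NR’ phase, a faster graphics card alone will not help much.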

Although your graphics card is 5 years old, it has 8GB of memory, so it might still be doing some useful work. You need to assess how your current processing compares with mine; the results from the other two machines and graphics cards might then help determine the best route to reduce the 40 seconds per image to a more cost-effective length.

In my tests, processing my 10 images was 6.6 times faster on my Grandson’s machine than on mine and 9.8 times faster on my Son’s machine. The power and cost of one of those machines is very high, i.e. the graphics card of one probably cost more than the entire build of the other, but that is not reflected in the performance difference.

There are processors faster than my Son’s machine but … and the 3080 should be faster still than his 2080(Ti?).

Your RAW images are over twice the size of mine so that is going to have an impact as well. Looking at the figures for my images the slower machine was processing each image in 6.6 seconds and the faster at 4.5 for DP XD. Doubling those times gives us at least 13.2 and 9 seconds per image or 1.1 hours and 45 minutes to process 300 images but I am outputting 100% JPGs in my tests and you are outputting DNGs and you may well have way more complex edits to apply.
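The scaling estimate above can be written out as a short calculation. This is a rough sketch, assuming processing time scales linearly with raw file size (the 2× factor) and ignoring the JPG vs DNG output difference and edit complexity; the helper names are illustrative only.

```python
SIZE_FACTOR = 2.0  # assumption: X-H2 raws are about twice the size, so about twice the time
BATCH = 300        # images from a typical event


def batch_seconds(seconds_per_image: float, images: int = BATCH) -> float:
    """Total time for a batch, assuming every image takes the same time."""
    return seconds_per_image * images


def describe(seconds: float) -> str:
    """Format a duration in seconds as hours and minutes."""
    return f"{seconds / 3600:.2f} h ({seconds / 60:.0f} min)"


if __name__ == "__main__":
    print(f"Current PC at 40 s/image: {describe(batch_seconds(40.0))}")
    for label, my_seconds in [("slower machine", 6.6), ("faster machine", 4.5)]:
        scaled = my_seconds * SIZE_FACTOR
        print(f"{label}: {scaled:.1f} s/image -> {describe(batch_seconds(scaled))}")
```

This reproduces the 13.2 and 9 seconds per image (about 1.1 hours and 45 minutes for 300 images) quoted above, against roughly 3.3 hours at the current 40 seconds per image.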

I hope that this helps a bit.

Regards

Bryan

Thanks for the feedback. I’ll do my due diligence and search for similar questions.

Regards.

Thanks for your reply Bryan, much appreciated. Although I worked for over 40 years in IT, and still maintain a couple of websites for local non-profits, I’ve been out of touch with technological advances in computers … graphics… After a bit of research, and looking at various options, I did come across a Dell desktop with this graphics card: NVIDIA® GeForce RTX™ 3080, 10 GB, LHR

Another ‘gotcha’ is that I’ll probably need new hearing aids (probably to the tune of $6k). If that’s the case, a new computer is definitely on my wish list for the future.

Again, thanks … and to others on this thread, I’ve learned a lot in the past 24 hours. Regards,

Bill

@coughlin47 (Bill) Sorry to hear that you might need new hearing aids which might put any upgrade in jeopardy.

If you have the internet speed to upload a Raw (I don’t!) and a typical DOP and a snapshot of your export settings I can certainly create a batch of images and run them through my old i7 4790K and my 3060 to see what PhotoLab makes of it.

I could download the PureRAW 2 trial and try the batch through there if you are interested.

I worked for the same computer manufacturer for a “mere” 36 years but that was after 4 years doing a computer degree (Year 3 was an industrial placement), 2 years research in the same department and then 3 years lecturing, you guessed it, in the same department, then I wondered what the “real” world looked like and went to work for Burroughs that became Unisys.

I believe that it should be possible to get a machine with a 2060 or 3060 for around the £1,000 mark which would be about $1,250 and that might just provide a realistic solution but is still a sizable chunk of money.

Take care

Bryan

My limited finding is that a GPU with ray-tracing capability (such as an RTX card), which also has some AI-type features used for upscaling in gaming, will beat any CPU for speed and total power usage.

My 8-core Ryzen CPU gets all cores maxed out to TDP with good water cooling, and it throttles down and back up to max capacity. It takes roughly 20 seconds to process on the CPU vs 6 seconds on the RTX GPU. The GPU fans are barely turning, so it certainly appears to be well under its TDP, and it’s not likely drawing anywhere near the 105W that the CPU draws at TDP. This is using the DeepPrime method; I haven’t tested anything else.

If you multiply the CPU power usage by the longer time it takes, my feeling is that the GPU, running for less time, is more energy efficient, though it is a higher initial outlay. If you are batch processing and can do something else while it processes, then it’s not so much of an issue.
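The energy argument above is just power multiplied by time. Because the GPU’s actual draw was not measured, this sketch instead computes the GPU power at which it would use as much energy as the CPU run. It assumes the quoted 105W applies for the whole 20 seconds and ignores the rest of the system (including the CPU’s own share of work while the GPU is busy); the names are hypothetical.

```python
CPU_WATTS = 105.0   # CPU power at TDP, as quoted
CPU_SECONDS = 20.0  # DeepPrime time on the CPU, as quoted
GPU_SECONDS = 6.0   # DeepPrime time on the RTX GPU, as quoted


def energy_joules(watts: float, seconds: float) -> float:
    """Energy = power x time (1 joule = 1 watt for 1 second)."""
    return watts * seconds


def break_even_gpu_watts() -> float:
    """GPU draw at which the GPU run would use as much energy as the CPU run."""
    return energy_joules(CPU_WATTS, CPU_SECONDS) / GPU_SECONDS


if __name__ == "__main__":
    cpu_j = energy_joules(CPU_WATTS, CPU_SECONDS)
    print(f"CPU run: {cpu_j:.0f} J ({cpu_j / 3600:.2f} Wh)")
    print(f"The GPU only uses more energy if it draws over {break_even_gpu_watts():.0f} W")
```

With these figures the CPU run uses 2,100 J, so the GPU would have to draw more than 350W for the CPU to come out ahead.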

@rextal I have never proposed trying to replace a graphics card with the CPU.

What I have said is that the Google spreadsheet “distorts” the relative performance of the different graphics cards in the sheet because it fails to split the time taken by DxPL into its two major elements, one of which is predominantly CPU-bound and the other predominantly GPU-bound.

My premise is that any configuration needs a balance between CPU and GPU “power” and that the “law of diminishing returns” affects both, i.e. there is a point where further expenditure on both will continue to yield an improvement but at considerably greater incremental cost.

For those that use the product for business purposes then every second counts but for the “hobbyists” amongst us then sacrificing a little time for cost savings probably (possibly) makes sense.

So we have:

CPU:-

- Normal editing and rendering while opening new or old directories and applying presets or modifying editing options etc.

- The preliminary part of the export process, referred to as ‘NO NR’ in my spreadsheet below.

- A small amount of involvement (I believe small) in managing the activities of the GPU when it is undertaking ‘DeepPrime’ and ‘DeepPrime XD’ noise reduction.

GPU:-

- A suggestion by a DxO software engineer that the GPU can be used in certain routine editing functions.

- The noise reduction processing for both ‘DeepPrime’ and ‘DeepPrime XD’.

The following spreadsheet comes from tests conducted on:

- An i7 4790K running at 4.4GHz equipped with a GTX 1050Ti 4GB GPU

- Another i7 4790K running at 4.4GHz equipped with an RTX 3060 12GB GPU (an upgrade from a GTX 1050 2GB GPU).

- My Grandson’s system, a Ryzen 5 3600X with an RTX 2060

- My Son’s system, a Ryzen 3950X with an RTX 2080

The spreadsheet shows the huge difference that the RTX 3060 makes compared to the GTX 1050Ti (and it was even greater compared to the GTX1050 2GB card).

But while the Google spreadsheet might suggest that I should buy an even more powerful graphics card, my spreadsheet shows that even if the card could reduce the ‘NR’ time to 0, I would still be left with the ‘No NR’ time, i.e. the Google spreadsheet is “masking” reality.

With my “Golf course” images the ‘No NR’ time is 499 seconds on my i7, but 276 seconds on a Ryzen 3600X and 208 seconds on the, even more powerful, Ryzen 3950X whereas a 0 ‘DP XD’ time would only reduce the overall time by a further 245 seconds after the installation of the RTX 3060 card.
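The “masking” effect is an instance of Amdahl’s law: a faster GPU can only shrink the ‘NR’ part of the export. A minimal sketch using the i7 + RTX 3060 “Golf course” figures above, assuming the two phases simply add up (so the ‘DP XD’ total is 499 + 245 = 744 seconds); the function names are illustrative.

```python
NO_NR = 499.0  # 'No NR' seconds, i7 4790K, Golf course batch
NR = 245.0     # seconds a zero 'DP XD' time would remove (RTX 3060)


def overall_speedup(no_nr: float, nr: float, nr_speedup: float) -> float:
    """Amdahl's law: only the NR phase gets faster; 'No NR' is unchanged."""
    return (no_nr + nr) / (no_nr + nr / nr_speedup)


def speedup_limit(no_nr: float, nr: float) -> float:
    """Best case: an infinitely fast GPU takes the NR time to zero."""
    return (no_nr + nr) / no_nr


if __name__ == "__main__":
    for factor in (2, 4, 10):
        print(f"DP XD {factor}x faster -> whole export {overall_speedup(NO_NR, NR, factor):.2f}x faster")
    print(f"Upper bound with NR time = 0: {speedup_limit(NO_NR, NR):.2f}x")
```

Even an infinitely fast card caps the gain at about 1.49x (744 / 499) for this batch on this CPU.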

So if I can reduce the ‘No NR’ time to the same figure as achieved on the 3600X, I am going to save 223 seconds, and going to a 3950X would save a total of 291 seconds, but that is only an additional 68 seconds over the slower and much cheaper 3600X for 109 images!
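Working the CPU comparison through from the ‘No NR’ times quoted above is simple subtraction. A minimal sketch; the dictionary keys are just labels, and it only looks at the ‘No NR’ phase.

```python
NO_NR_SECONDS = {  # 'No NR' time for the 109-image Golf course batch, as quoted
    "i7 4790K": 499.0,
    "Ryzen 3600X": 276.0,
    "Ryzen 3950X": 208.0,
}
IMAGES = 109


def saving(baseline: str, upgrade: str) -> float:
    """Seconds saved on the 'No NR' phase by moving from baseline to upgrade."""
    return NO_NR_SECONDS[baseline] - NO_NR_SECONDS[upgrade]


if __name__ == "__main__":
    s_3600x = saving("i7 4790K", "Ryzen 3600X")
    s_3950x = saving("i7 4790K", "Ryzen 3950X")
    print(f"3600X saves {s_3600x:.0f} s ({s_3600x / IMAGES:.1f} s per image)")
    print(f"3950X saves {s_3950x:.0f} s, only {s_3950x - s_3600x:.0f} s more than the 3600X")
```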

So rather than seeking to improve the ‘NR’ times, I have chosen to “attack” the ‘NO NR’ time, and to that end I currently have the following on order:

- A Ryzen 5600G processor (I prefer having some independent graphics capability even though this is slower than the, slightly more expensive, 5600X)

- An MSI B550 Tomahawk motherboard (my Son selected MSI B450 Tomahawk boards for their systems after research showed that their VRMs were considered amongst the best). The B550 board I have chosen is considered capable of handling an overclocked Ryzen 5900, so I have headroom for an upgrade.

- 2 x 16GB memory (leaving 2 slots available)

I ordered this for £350 (there was a £20 discount for new “subscribers” ordering over £250 of equipment), just a little more expensive than the RTX3060.

When (if) it arrives, I will update the spreadsheet once I have the new machine running.

The Ryzen 5900 CPU was on sale for £334, and a second-hand one was available for £250 from a supplier I have bought my last 4 HDDs from, but I decided to keep my budget under more control for now.

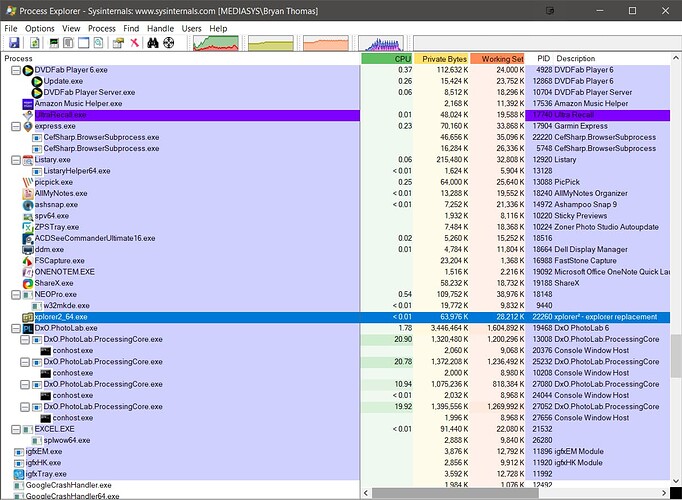

Please note that one test I have not run, on the Ryzen 3950X in particular, is one where the number of simultaneous exports is increased, e.g. to 4 or more. On my i7s, running 4 copies of DxO.PhotoLab.ProcessingCore.exe that compete for resources obviously causes some interference, but with a faster CPU (and more powerful GPU?) it might be possible to run 4 or more simultaneous exports and achieve even better times?

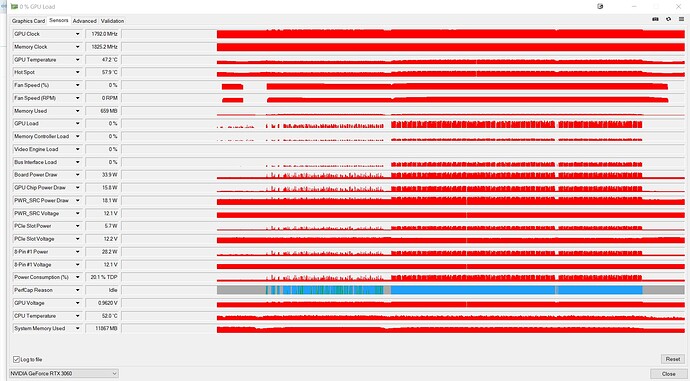

Running a batch of 50 x ‘NO NR’ followed by 50 x ‘DP’ followed by 50 x ‘DP XD’ produced the following from GPU-Z.

I believe that the tests were of 50 Nikon images, i.e. I have taken the 1 Egypt test image and copied it 50 times, and copied the 5 Nikon test images 10 times. I now need to copy the 10 “BHT” images 5 times and reduce the “Golf Course” images to 50 to create 4 long(ish) test groups.
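Building those long(ish) test groups can be scripted. `build_test_group` is a hypothetical helper, not part of PhotoLab or PureRAW: it copies a set of source images round-robin until a target count is reached (e.g. 1 × 50, 5 × 10, 10 × 5) and uses only the first 50 of a larger set such as the 109 Golf course images. It assumes DxO’s `<image file name>.dop` sidecar naming so that each copy carries the same edits; check your own folders before relying on that.

```python
import shutil
import tempfile
from pathlib import Path


def build_test_group(sources: list[Path], target_count: int, dest: Path) -> list[Path]:
    """Copy sources round-robin into dest until it holds target_count images.

    Copies are named '<stem>_copyNN<suffix>' so they never collide. With more
    sources than target_count, only the first target_count are used.
    """
    if not sources:
        raise ValueError("need at least one source image")
    dest.mkdir(parents=True, exist_ok=True)
    made = []
    for i in range(target_count):
        src = sources[i % len(sources)]
        copy_no = i // len(sources) + 1
        out = dest / f"{src.stem}_copy{copy_no:02d}{src.suffix}"
        shutil.copy2(src, out)
        # Assumption: DxO keeps edits in a '<image file name>.dop' sidecar next to the raw.
        sidecar = src.with_name(src.name + ".dop")
        if sidecar.exists():
            shutil.copy2(sidecar, out.with_name(out.name + ".dop"))
        made.append(out)
    return made


if __name__ == "__main__":
    # Demo on dummy files; point `sources` at real raws to build e.g. 5 Nikon images x 10.
    with tempfile.TemporaryDirectory() as tmp:
        root = Path(tmp)
        sources = []
        for n in range(5):
            raw = root / f"nikon_test_{n}.NEF"
            raw.write_bytes(b"dummy raw data")
            sources.append(raw)
        group = build_test_group(sources, 50, root / "nikon_x50")
        print(len(group), "files, from", group[0].name, "to", group[-1].name)
```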