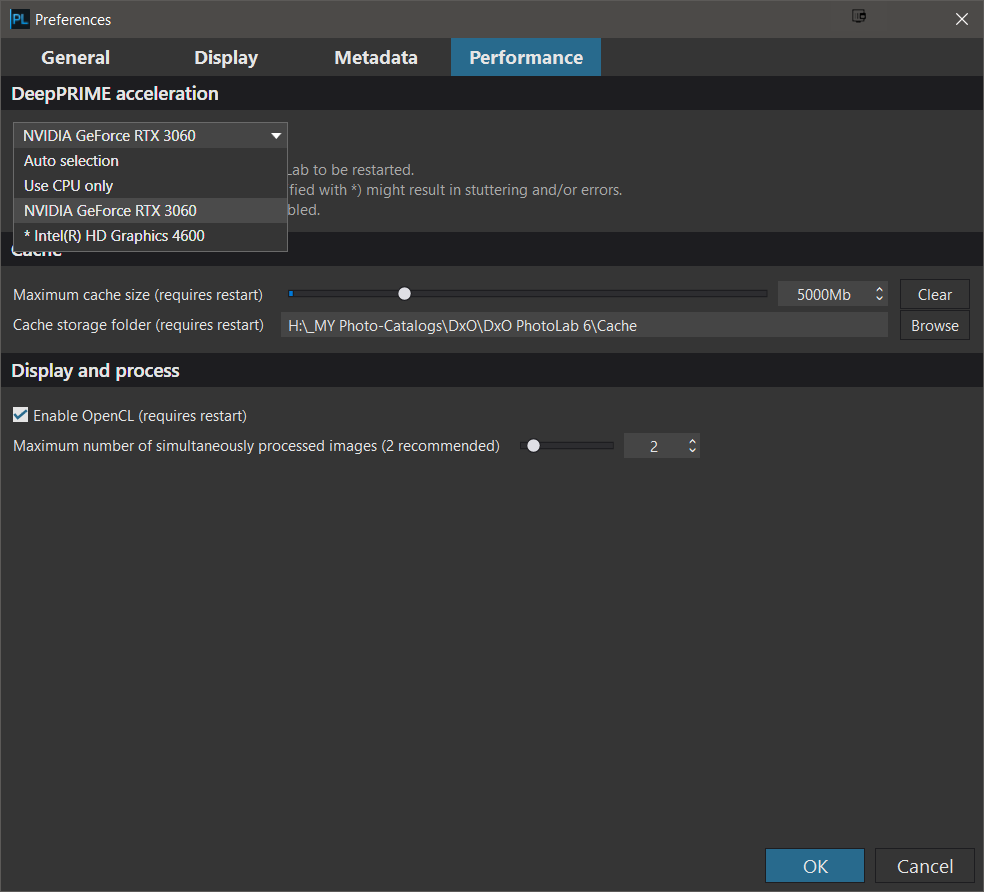

@RvL I believe that this is the case, and the Tensor cores (in connection with the Nvidia cards) have been mentioned from time to time.

So the processing is done by

- CPU to “develop” and apply edits to the image

- CPU to handle task scheduling and communication with the GPU essentially for the elements relating to DP and DP XD processing

- GPU elements to handle denoising as per the DP and DP XD AI model, under the control of the CPU

- CPU to output the final image to the designated device and move onto the next image etc…

Without an approved GPU all noise processing, i.e. PRIME, DP and DP XD, will use the CPU and the timings are big, i.e. the process is slow. With an approved GPU the times for DP and DP XD will be reduced in line with the “power” of the GPU.

But increasing the power of the GPU will “only” improve the performance of the Noise reduction elements of the total processing. With a monster card like the 4080 you might be able to reduce the noise reduction element to close to 0 but you will still be left with the rest of the work to be done by the CPU.
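To put that reasoning into numbers, here is a rough sketch. It assumes the total export time is simply a CPU part that the GPU cannot touch plus a noise reduction part that shrinks with GPU “power”. The function name `estimated_total` and all the timings are made up for illustration; they are not measurements from PhotoLab.

```python
def estimated_total(cpu_seconds, nr_seconds, gpu_speedup):
    """Estimate total export time when only noise reduction gets faster.

    cpu_seconds  -- time for everything the CPU does (assumed fixed)
    nr_seconds   -- noise reduction time on the current GPU
    gpu_speedup  -- how many times faster the new GPU is at noise reduction
    """
    if gpu_speedup <= 0:
        raise ValueError("gpu_speedup must be positive")
    return cpu_seconds + nr_seconds / gpu_speedup


# Hypothetical figures: 40 s of CPU work and 20 s of noise reduction.
for speedup in (1, 2, 10, 1000):
    total = estimated_total(40.0, 20.0, speedup)
    print(f"GPU {speedup}x faster -> about {total:.2f} s in total")
```

However large the speedup, the estimate never drops below the CPU part, which is the point being made above.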

However, you are running a Ryzen 3900 (passmark = 30602, 2600), so your processing time is already pretty close to the best you can achieve without spending a large amount of money, which an RTX 4080 will certainly consume, i.e. your GPU time will be close to 0, possibly along with your bank balance.

If you are interested, I ran some benchmarks on my machines and on my Son’s and Grandson’s, and the figures are here: Which graphics card do you use with Photolab? - #27 by BHAYT

My Son’s processor is a Ryzen 3950X, which is a bit faster than yours (39012, 2710), my Grandson’s is a Ryzen 5 3600X at just over half the speed (17795, 2657), and I have an even older i7 4790K, which is a fraction of the speed with passmark scores of (8058, 2463).

We ran the Egypt and Nikon tests from the spreadsheet alongside 10 images of mine while I was trying to convince myself to buy a new graphics card. My Son’s GPU is an RTX 2080 (Ti?), my Grandson’s is an RTX 2060 and mine is now an RTX 3060.

So please run a test sequence (the Egypt test, the Nikon test and 5 or 10 images of your own with “typical” edits) three times:

1. With DP enabled

2. With DP XD enabled

3. With NR completely disabled

The time differences (1 - 3) and (2 - 3) are the portions of time attributable to the noise reduction element of the processing for DP and DP XD respectively, and will contain a lot of GPU activity and some CPU activity.

That is the (only) element that you could improve with a faster GPU or so I believe.
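If it helps, the subtraction can be written down as a small sketch. The function name `nr_share` and the timings are placeholders of mine, not real results; put in your own export times in seconds for the three runs.

```python
def nr_share(t_dp, t_dp_xd, t_no_nr):
    """Return the seconds attributable to noise reduction in each mode.

    t_dp     -- export time with DP enabled (run 1)
    t_dp_xd  -- export time with DP XD enabled (run 2)
    t_no_nr  -- export time with NR completely disabled (run 3)
    """
    return {
        "DP": t_dp - t_no_nr,        # run 1 minus run 3
        "DP XD": t_dp_xd - t_no_nr,  # run 2 minus run 3
    }


# Placeholder timings in seconds, NOT measurements.
shares = nr_share(t_dp=60.0, t_dp_xd=90.0, t_no_nr=40.0)
for mode, seconds in shares.items():
    print(f"{mode}: {seconds:.1f} s attributable to noise reduction")
```

Run the same batch for all three exports, otherwise the differences will not mean much.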

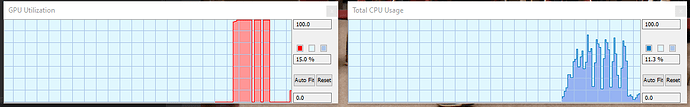

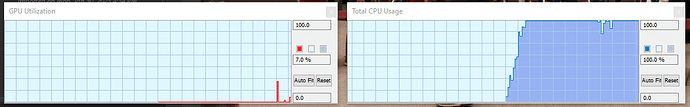

Why you are seeing so little activity I cannot explain, but I am trying to get statistics from my own system to help my understanding.

Unfortunately there is no-one from DxO on the forum any more so we have no-one to ask except each other.