I never said that. That’s absolutely not true.

It’s 3 channels of numbers: R, G and B data per pixel. Normally in the same colour space as the raw sensor data. You might call that ‘unknown profile’ or whatever.

If a raw file had the value 25% for red somewhere, the linear DNG will have 25% for red in the same spot. Whatever that 25% means for colour.

If a raw file had no value for red at a certain pixel (because that is what happens, there are ‘gaps’ so to speak), that value will be filled in / demosaiced in the linear DNG file.
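To make ‘filling the gaps’ concrete, here is a minimal sketch of bilinear interpolation of the red channel on a tiny Bayer mosaic. It assumes an RGGB layout (red at even rows and even columns) and uses plain bilinear averaging. This is NOT DxO’s demosaicing algorithm, which is not described here; it only shows that measured values are kept as-is and the missing ones are interpolated from their neighbours.

```python
# Minimal sketch: fill the red "gaps" of a Bayer mosaic by bilinear averaging.
# Assumption: RGGB layout, red samples sit at even rows and even columns.
# This is an illustration, not the algorithm any real raw converter uses.

def is_red_site(y, x):
    return y % 2 == 0 and x % 2 == 0


def demosaic_red(mosaic):
    """Return a full-resolution red plane from a Bayer mosaic (list of lists)."""
    h, w = len(mosaic), len(mosaic[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            if is_red_site(y, x):
                # A measured red value: copied unchanged.
                out[y][x] = float(mosaic[y][x])
                continue
            # A gap: average the red samples inside the 3x3 neighbourhood.
            samples = [
                mosaic[yy][xx]
                for yy in range(max(0, y - 1), min(h, y + 2))
                for xx in range(max(0, x - 1), min(w, x + 2))
                if is_red_site(yy, xx)
            ]
            out[y][x] = sum(samples) / len(samples)
    return out


mosaic = [
    [100, 50, 200, 50],
    [50, 10, 50, 10],
    [300, 50, 400, 50],
    [50, 10, 50, 10],
]
red = demosaic_red(mosaic)
print(red[0][0], red[0][1], red[1][1])  # 100.0 150.0 250.0
```

The measured red value at the top-left stays exactly 100; only the pixels that had no red sample get a new, interpolated number.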

Want to get technical? Get a Bayer raw file.

_DSC1309.ARW (47.0 MB)

I’ll use this one. It has clipped skies, so I can easily find the white level.


Load it into DxO PL5 and set it to ‘no correction’. Or load it into PL6, set it to ‘no correction’ and set the gamut to ‘legacy’.

Export it as DNG with ‘optical corrections only’.

Now we’re going to extract the 4 raw channels from the Bayer file (the original raw file). The 4channels tool from the LibRaw project extracts the raw numbers stored inside the raw file and writes them out as R, G1, G2 and B.

4channels _DSC1309.ARW

It creates files such as _dsc1309.arw.R.tiff.
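What ‘extracting the 4 channels’ amounts to can be pictured with a small sketch: every 2x2 block of the mosaic contributes one value to each of four planes, so each plane has half the width and half the height of the sensor. The RGGB layout is an assumption for illustration; the real 4channels tool reads the actual CFA layout from the raw file.

```python
# Minimal sketch of splitting a Bayer mosaic into four half-size planes.
# Assumption: RGGB layout. Real tools read the CFA pattern from the file.

def split_rggb(mosaic):
    """Split an RGGB mosaic (list of lists) into R, G1, G2 and B planes."""
    r = [row[0::2] for row in mosaic[0::2]]   # even rows, even columns
    g1 = [row[1::2] for row in mosaic[0::2]]  # even rows, odd columns
    g2 = [row[0::2] for row in mosaic[1::2]]  # odd rows, even columns
    b = [row[1::2] for row in mosaic[1::2]]   # odd rows, odd columns
    return r, g1, g2, b


mosaic = [
    [100, 51, 200, 52],
    [53, 10, 54, 11],
    [300, 55, 400, 56],
    [57, 12, 58, 13],
]
r, g1, g2, b = split_rggb(mosaic)
print(r)  # [[100, 200], [300, 400]]
```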

It isn’t oriented correctly (it needs to be rotated left). Also, it’s something like 2012x3012 pixels. That’s because raw converters often crop away a few edge pixels for the demosaicing.

Also, 4channels has already subtracted the black level, but the maximum is simply whatever my sensor can produce as its maximum readout. Let’s see what it is with ImageMagick:

magick _dsc1309.arw.R.tiff -format "%[max]" info:

It reports a maximum value of 15864. Since all channels are clipped in this file, this is also the maximum value my sensor can produce. I want to scale it between 0 and 65535 instead of 0 and 15864.

65535 / 15864 = 4.131051437216338880484114977. So we multiply every value by 4.131051437216338880484114977 to make it sit between 0 and 65535.
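The same arithmetic as a small sketch. The white level 15864 is the maximum ImageMagick reported above; rounding to the nearest integer and clamping to 0..65535 is an assumption about how a 16-bit integer file would store the result, and ImageMagick’s exact rounding may differ slightly.

```python
# Sketch of the scaling step: stretch 0..WHITE_LEVEL to 0..65535.
WHITE_LEVEL = 15864  # maximum value reported for this file
TARGET_MAX = 65535   # full 16-bit range

factor = TARGET_MAX / WHITE_LEVEL
print(round(factor, 6))  # 4.131051


def scale(value):
    # Assumption: round to nearest and clamp, as a 16-bit integer TIFF would hold it.
    return max(0, min(TARGET_MAX, round(value * factor)))


print(scale(15864), scale(3966))  # 65535 16384
```

A clipped sample lands exactly on 65535, and a sample at a quarter of the white level lands at about a quarter of the 16-bit range.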

So we’re going to multiply it, rotate it left, crop a 2000x3000 area out of the middle, and save the result as a separate TIFF file.

magick _dsc1309.arw.R.tiff -evaluate multiply 4.131051437216338880484114977 -rotate -90 -gravity center -crop 2000x3000+0+0 +repage -compress none from_raw.tif
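The rotate-left and centre-crop part of that command can be sketched on a toy image stored as a list of rows. This only illustrates the geometry; how ImageMagick rounds the centre offset when the size difference is odd is not modelled here.

```python
# Sketch: rotate an image 90 degrees counter-clockwise ("left"), then crop the middle.

def rotate_left(img):
    """Rotate a list-of-rows image 90 degrees counter-clockwise."""
    h, w = len(img), len(img[0])
    # Output has w rows and h columns; the last column becomes the first row.
    return [[img[c][w - 1 - r] for c in range(h)] for r in range(w)]


def center_crop(img, width, height):
    """Cut a width x height window out of the middle of the image."""
    h, w = len(img), len(img[0])
    top = (h - height) // 2
    left = (w - width) // 2
    return [row[left:left + width] for row in img[top:top + height]]


img = [[r * 10 + c for c in range(6)] for r in range(4)]  # 6 wide, 4 tall
rotated = rotate_left(img)                                # 4 wide, 6 tall
cropped = center_crop(rotated, 2, 4)                      # 2 wide, 4 tall
print(rotated[0])  # [5, 15, 25, 35]
print(cropped)     # [[14, 24], [13, 23], [12, 22], [11, 21]]
```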

Right… now let’s look at the DNG.

Use dcraw_emu from the LibRaw project to ‘render’ the DNG.

BUT…

- we don’t want to apply any white balance multipliers (-r 1 1 1 1)
- we don’t want to apply any camera matrix (-M)
- we don’t want to convert it to any other profile whatsoever (-o 0)

and we want to write it without gamma correction to a 16-bit TIFF (-T -4).

dcraw_emu -T -4 -o 0 -r 1 1 1 1 -M _DSC1309.dng
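Roughly what those flags should amount to per sample, as a hedged sketch: with unity white balance multipliers, no colour matrix, raw colour output and linear 16-bit output, each value is only black-subtracted and stretched so the white level lands on 65535. The function name `render_linear` and its parameters are hypothetical; this is a model of the idea, not LibRaw’s code.

```python
# Hypothetical model of a "do as little as possible" render (-r 1 1 1 1 -M -o 0 -4).
# Assumption: the only remaining operations are black subtraction and scaling
# the white level to 65535; no matrix, no gamma curve, multipliers all 1.

def render_linear(value, black, white, wb_mul=1.0):
    scaled = (value - black) * wb_mul * 65535 / (white - black)
    # Clamp and round to what a 16-bit integer TIFF can hold.
    return max(0, min(65535, round(scaled)))


print(render_linear(1500, 1000, 3000))  # 16384
print(render_linear(3000, 1000, 3000))  # 65535
```

With `wb_mul` fixed at 1 for every channel, the green channels are not pulled down relative to red and blue, which is why the result still looks green.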

You could look at this file now. It’ll probably look pretty green. It’s the raw numbers from the Bayer channels, with nothing done to them except scaling them to sit between 0 and 65535. You’re looking at numbers, not colours, so to speak.

We take that file, keep only the red channel, and scale it down to 50% by averaging. This brings it back to 2000x3000.

magick _DSC1309.dng.tiff -channel R -separate -scale 50% -compress none from_dng.tif
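ImageMagick documents `-scale` as minifying by pixel block averaging, so at exactly 50% it should behave like averaging each 2x2 block into one pixel. A small sketch of that, under the assumption that the two are equivalent for even dimensions; note that block averaging leaves the overall mean unchanged, which is what makes the mean comparison below meaningful.

```python
# Sketch: 50% downscale by averaging every 2x2 block (a box filter).

def downscale_half(plane):
    h = len(plane) // 2 * 2      # ignore an odd last row, if any
    w = len(plane[0]) // 2 * 2   # ignore an odd last column, if any
    return [
        [
            (plane[y][x] + plane[y][x + 1] + plane[y + 1][x] + plane[y + 1][x + 1]) / 4
            for x in range(0, w, 2)
        ]
        for y in range(0, h, 2)
    ]


def mean(plane):
    values = [v for row in plane for v in row]
    return sum(values) / len(values)


plane = [[1, 3, 5, 7], [1, 3, 5, 7]]
small = downscale_half(plane)
print(small, mean(plane), mean(small))  # [[2.0, 6.0]] 4.0 4.0
```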

Now compare those files and name me any noteworthy differences, because in my test file here they are (practically) the same.

Look at the statistics of the numbers, like the mean:

magick from_raw.tif -format "%[mean]" info:

magick from_dng.tif -format "%[mean]" info:

7036.49 for one, 7059.74 for the other. To put that into perspective: if the numbers were between 0 and 255, the difference would be less than 1. So… the data is still the same.
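The perspective calculation spelled out, using the two means reported above:

```python
# How big is the difference between the two reported means?

def mean_difference_report(mean_a, mean_b, full_scale=65535):
    diff = abs(mean_b - mean_a)
    on_8bit_scale = diff / full_scale * 255   # same difference on a 0..255 scale
    relative_percent = diff / mean_a * 100    # difference relative to the mean
    return diff, on_8bit_scale, relative_percent


diff, eight_bit, pct = mean_difference_report(7036.49, 7059.74)
print(f"{diff:.2f} {eight_bit:.3f} {pct:.2f}%")  # 23.25 0.090 0.33%
```

On an 8-bit scale the gap is under a tenth of a single step, and relative to the mean it is about a third of a percent.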

In other words, I declare the sets of numbers to be identical, within the margin of error. Even the demosaicing algorithm used by DxO changed the numbers so little that the mean barely moves.

The data in a linear DNG file is (supposed to be) the same numbers as in your raw file, but ‘with the gaps filled in’. That’s what demosaicing means. Nothing done, nothing converted, nothing ‘colour space’, nothing ‘profile’… demosaicing is just interpolating the missing data from a Bayer or X-Trans sensor, not modifying the data that is already there.

This matters because lots of tools can then read that data and handle the numbers exactly as they would handle the numbers from a real raw file, except for the demosaicing step.

This is all different from a ‘non-linear DNG’ (a mosaiced or CFA DNG, which DxO can’t write but which Adobe DNG Converter makes, for instance). That kind of DNG contains the true Bayer data from your raw file, gaps and all. That’s why it doesn’t grow in size, while DxO’s DNGs do: DxO interpolates data, ‘fills in the gaps’ and writes those values to the file, making it bigger.
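A back-of-the-envelope sketch of why the linear DNG grows: a mosaiced file stores one sample per pixel, a demosaiced one stores three. The 6000x4000 sensor size is hypothetical, and real file sizes also depend on bit depth and compression, which this ignores.

```python
# Count stored samples per frame, ignoring bit depth and compression.

def stored_samples(width, height, linear):
    # Linear (demosaiced) DNG: R, G and B per pixel. CFA DNG: one value per pixel.
    channels = 3 if linear else 1
    return width * height * channels


w, h = 6000, 4000  # hypothetical 24 MP sensor, for illustration only
print(stored_samples(w, h, linear=False))  # 24000000
print(stored_samples(w, h, linear=True))   # 72000000
```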

DxO has been doing this correctly for years, which is awesome! It enables a workflow that others try to copy but can’t really seem to recreate. DxO even created PureRAW as a cheaper product just to embrace this workflow.

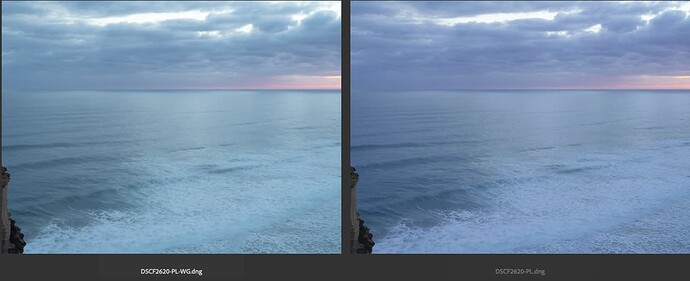

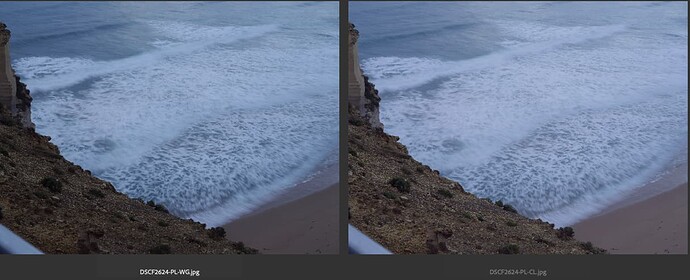

Now DxO PL6 with the wide gamut setting has changed this… and I don’t think it was meant to be that way.